The reason for this is that in order to estimate build time, you need to know how both the slicer and the scanning strategy work - as well as mechanical factors like scanning speed and recoating time. And while certain machine manufacturers (see below) share this information with Materialise, for many it simply isn't worth it. They see those process parameters as valuable, and don't see the benefit of sharing that data with a third-party software developer. Moreover, most of them can provide very accurate build time estimates in their own software, and the manufacturing engineers who use the machines take it as a given that they'll need to use that software at some point in the process anyway.
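To make the dependency concrete, here's a deliberately crude sketch of what a build time estimate might look like. Everything in it is an assumption on my part: the `ProcessParams` fields, the idea that scan path length per layer is roughly the scanned area divided by hatch spacing, and the fact that it ignores contours, jump moves, and multiple lasers or beams. It is not how Magics or any machine vendor actually does it.

```python
import math
from dataclasses import dataclass


@dataclass
class ProcessParams:
    """Hypothetical process parameters (not taken from any real machine)."""
    layer_thickness_mm: float
    hatch_spacing_mm: float
    scan_speed_mm_s: float
    recoat_time_s: float


def estimate_build_time_s(build_height_mm: float,
                          mean_layer_area_mm2: float,
                          p: ProcessParams) -> float:
    """Rough build time: every layer costs one recoat plus its hatch time."""
    # Layer count; the small tolerance guards against float round-off
    # when the height is an exact multiple of the layer thickness.
    n_layers = math.ceil(build_height_mm / p.layer_thickness_mm - 1e-9)
    # Assumed model: hatch path length per layer ~ scanned area / hatch spacing.
    path_length_mm = mean_layer_area_mm2 / p.hatch_spacing_mm
    scan_time_s = path_length_mm / p.scan_speed_mm_s
    return n_layers * (p.recoat_time_s + scan_time_s)


if __name__ == "__main__":
    # Placeholder numbers purely for illustration.
    params = ProcessParams(layer_thickness_mm=0.05, hatch_spacing_mm=0.1,
                           scan_speed_mm_s=1000.0, recoat_time_s=10.0)
    hours = estimate_build_time_s(10.0, 1000.0, params) / 3600.0
    print(f"Estimated build time: {hours:.2f} h")
```

The arithmetic is trivial. The hard part is the inputs, and those are exactly what most manufacturers keep to themselves.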

This strikes me as a big failing. Magics needs a way for its users to share data about their builds: a public repository of machine parameters and build times. Without that - or without, on the other hand, convincing the machine manufacturers to share that data themselves - Magics is left with a huge disconnect between the build setup and the end product. This undercuts Magics' claim to be "The link between your CAD file and the printed part." If it lacks basic data on build speed for the most common machines in the industry, what exactly is it linking to?

So: As of the time I'm writing this, I've got emails out to a handful of the biggest metal powder bed fusion machine manufacturers in the industry, asking for Magics learning platforms. If anyone out there can share that data with me, please send me a note!

Build Processors

My demo doesn't include these, but they're worth touching on. For a few big machine manufacturers (Renishaw, SLM, and EOS), Materialise has developed build processors that are tuned to those machines' capabilities and specifications. Presumably, these companies provide Materialise with in-depth data about how their machines work, some of which is either patented or proprietary. Materialise then builds software modules that, through a few intermediate steps (the most notable of which are slicing and subdividing/hatching), produce a job file that can go directly to a machine.

Materialise bills the build processors as reducing complexity in the manufacturing life cycle, and allowing both Materialise and the machine manufacturers "to focus on their core competencies." Having not played with them myself, I can't really comment. I hope to learn more soon.

A few things Magics *can't* do

To reiterate: It's my impression that Materialise built Magics to fill a really big hole in the existing work chain, and the bottom line is that no single party (let alone Materialise) created that work chain. It's also, in my opinion, *not* the right work chain for the future of additive manufacturing, and Magics' role in it highlights a lot of the problems in the industry today. Here are a few things that I noticed Magics can't do, for various obscure and not-so-obscure reasons (many of which are decidedly *not* Materialise's fault).

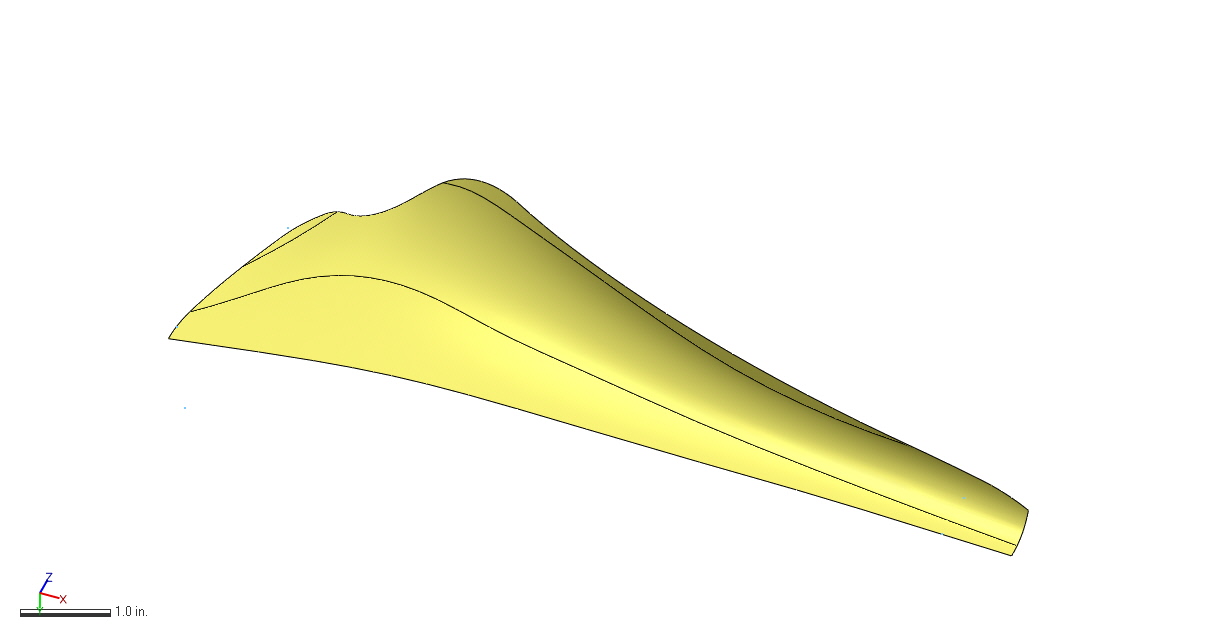

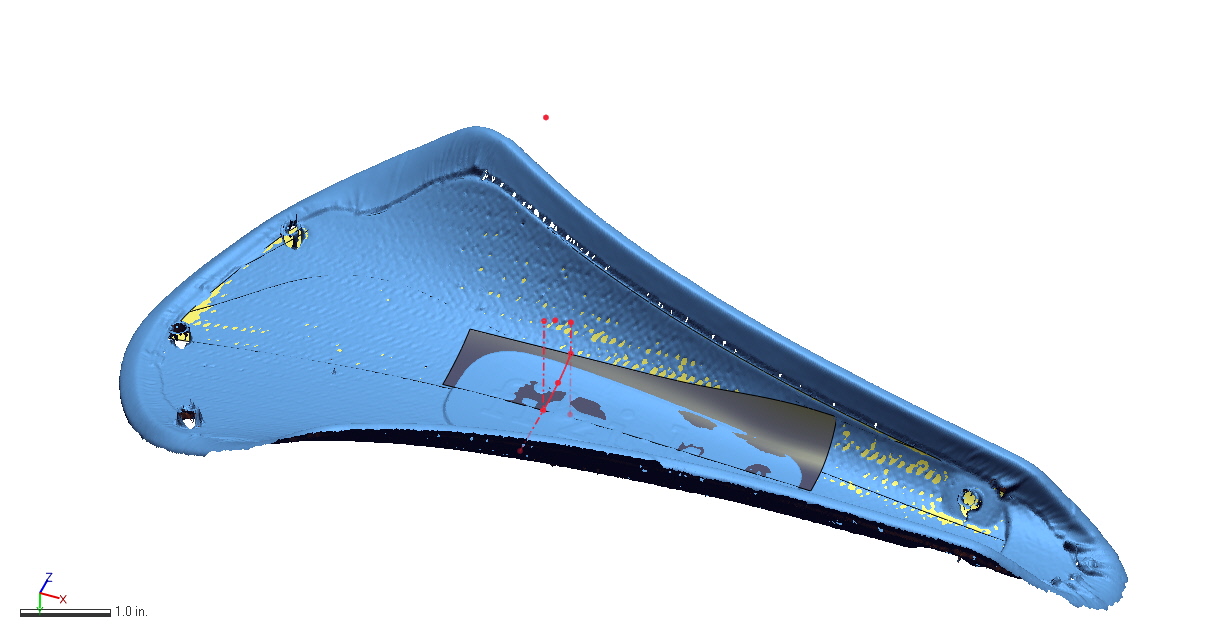

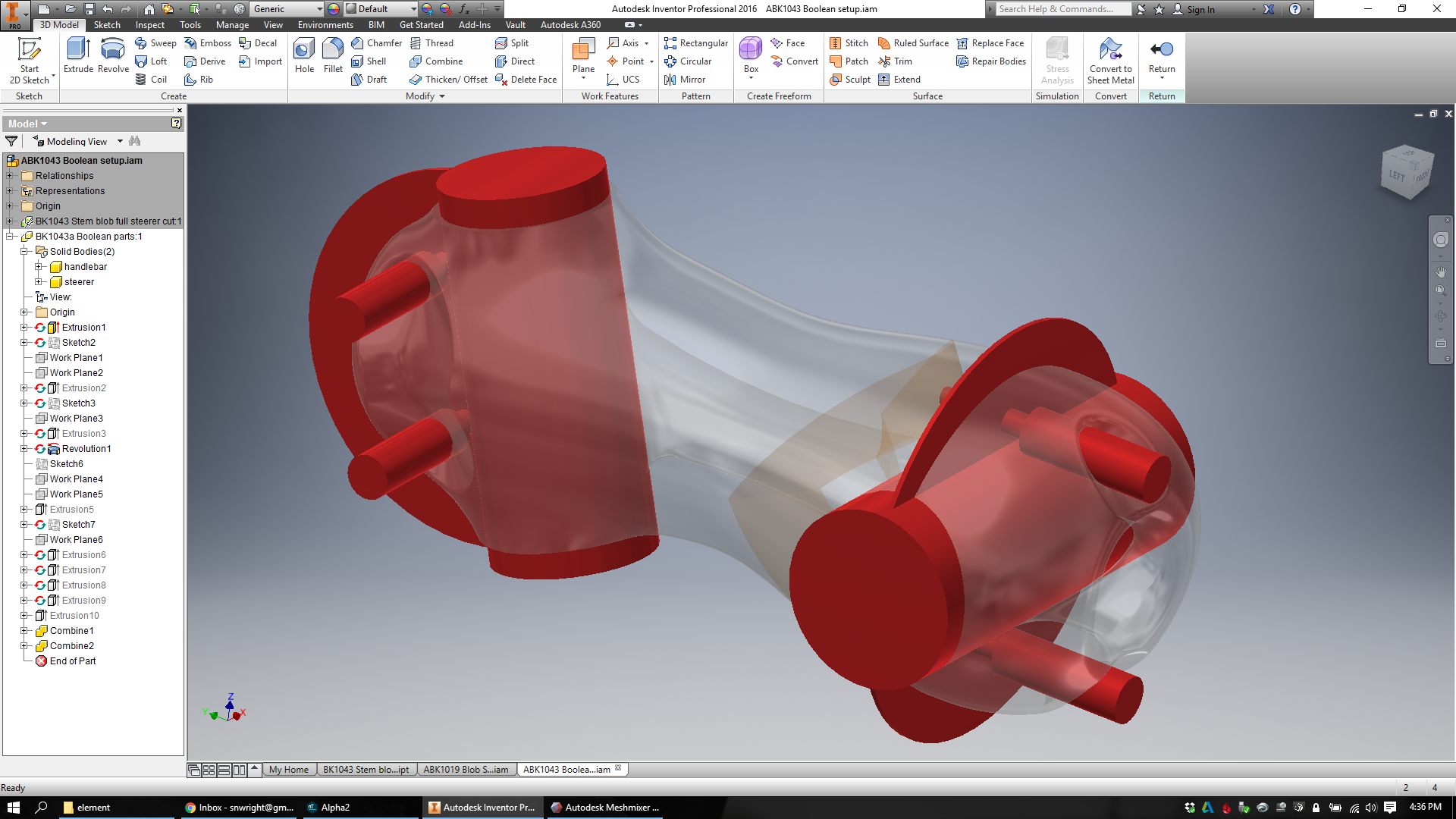

Understand the underlying design

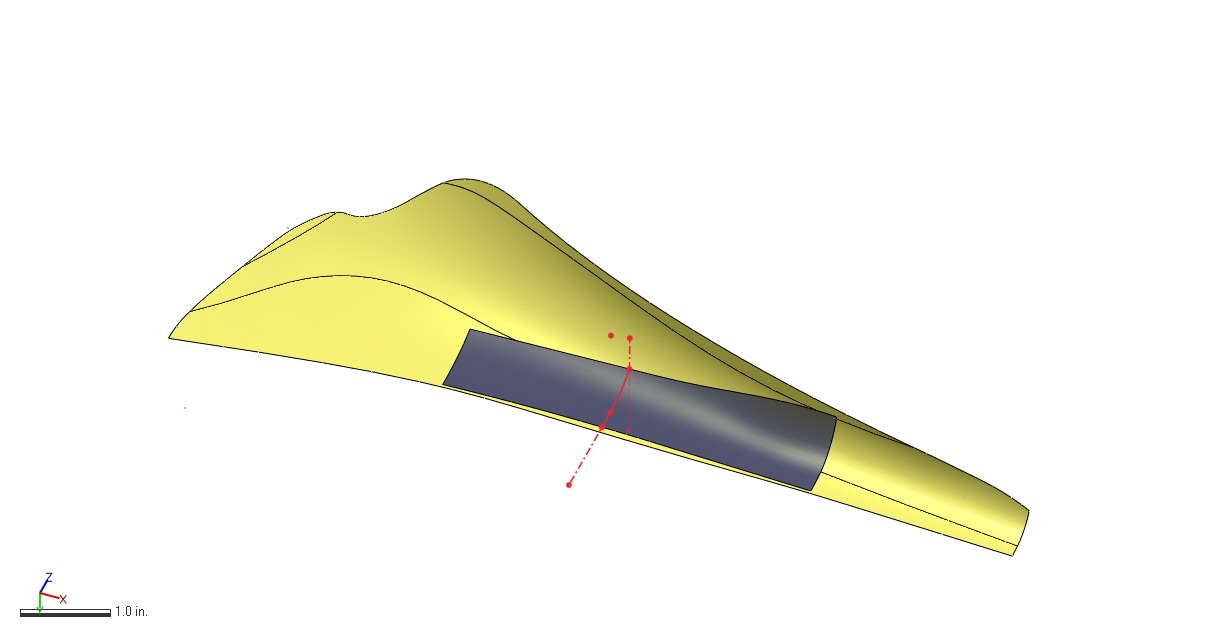

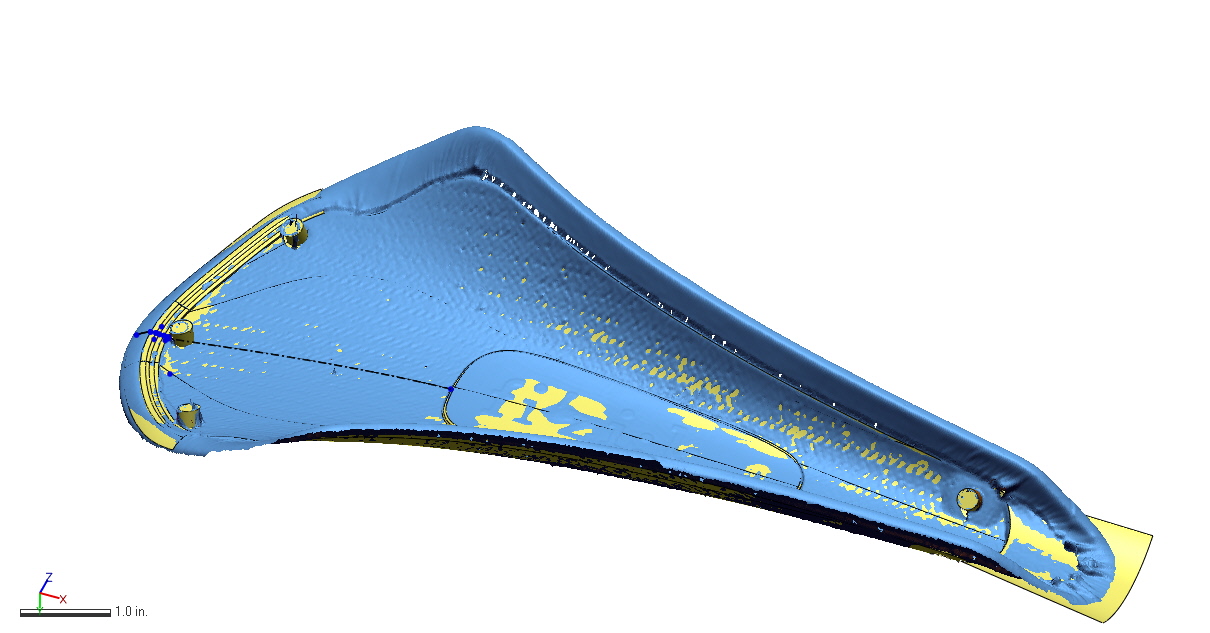

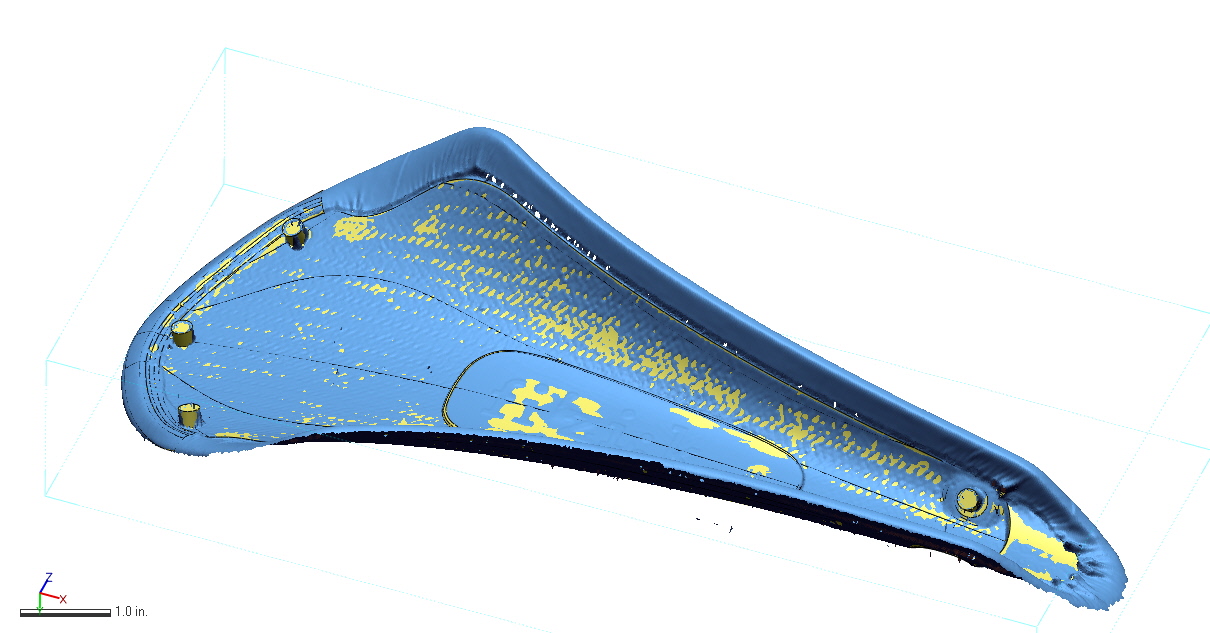

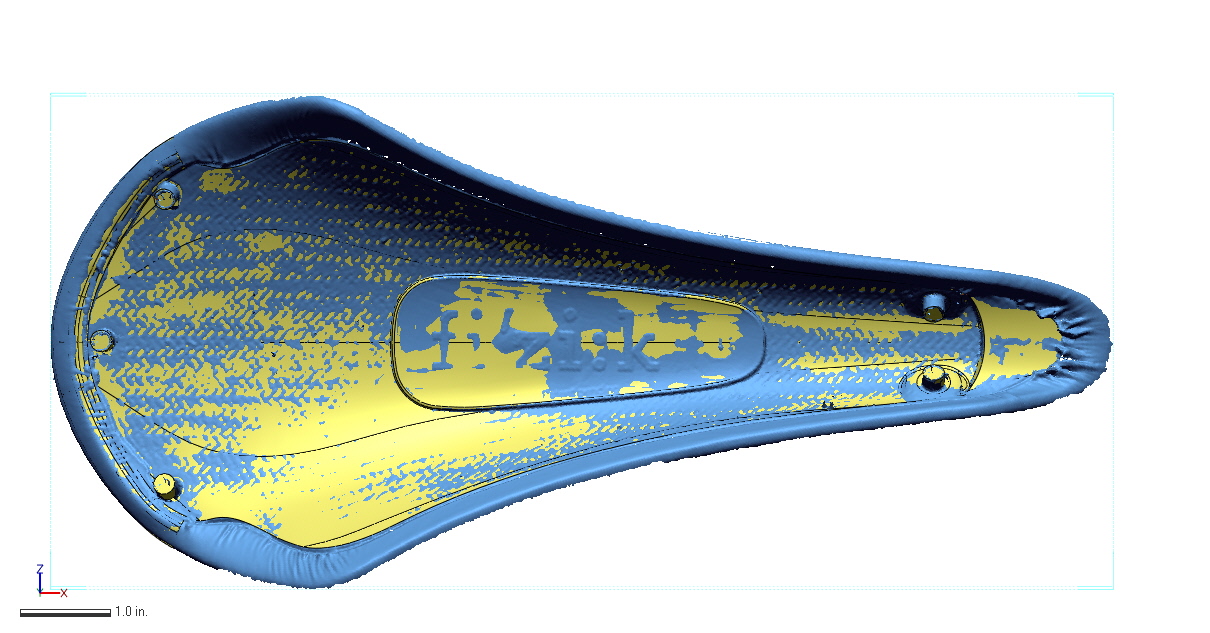

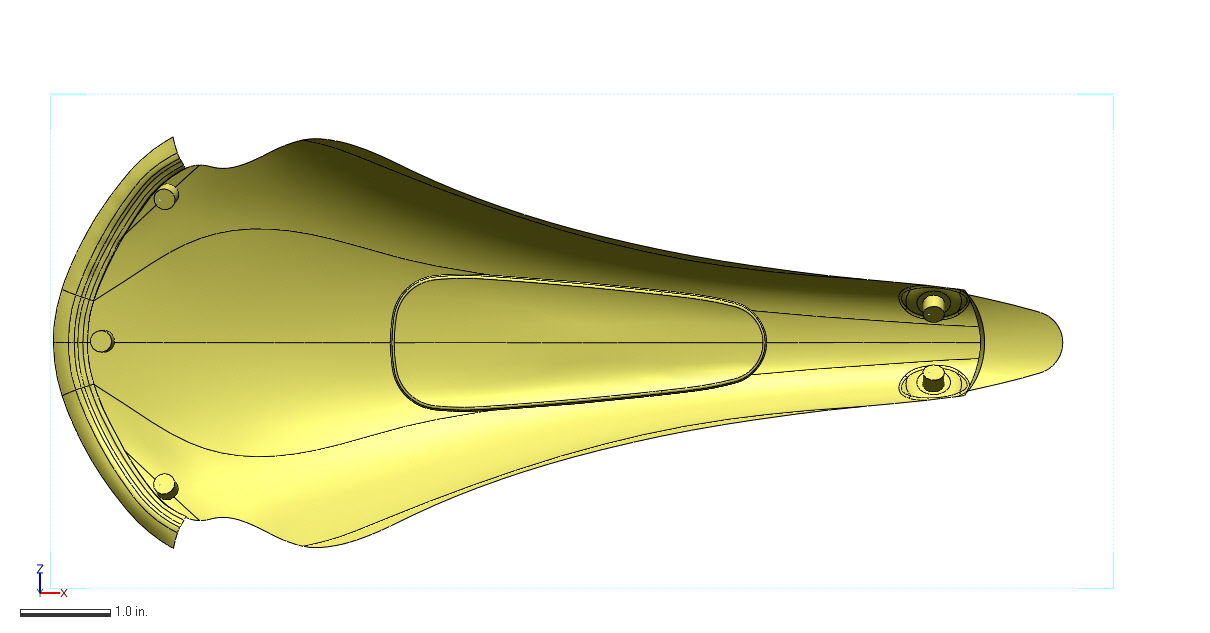

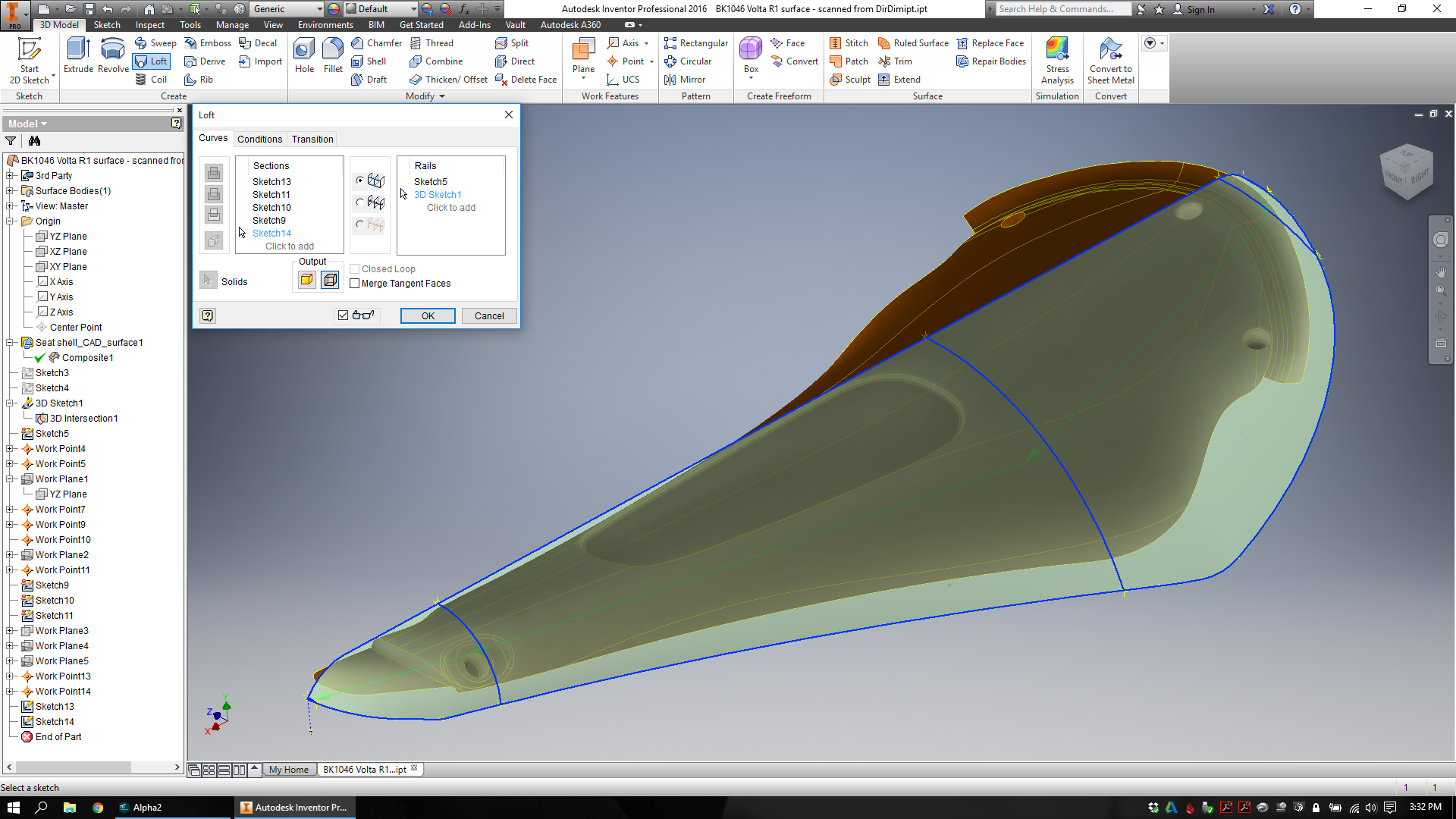

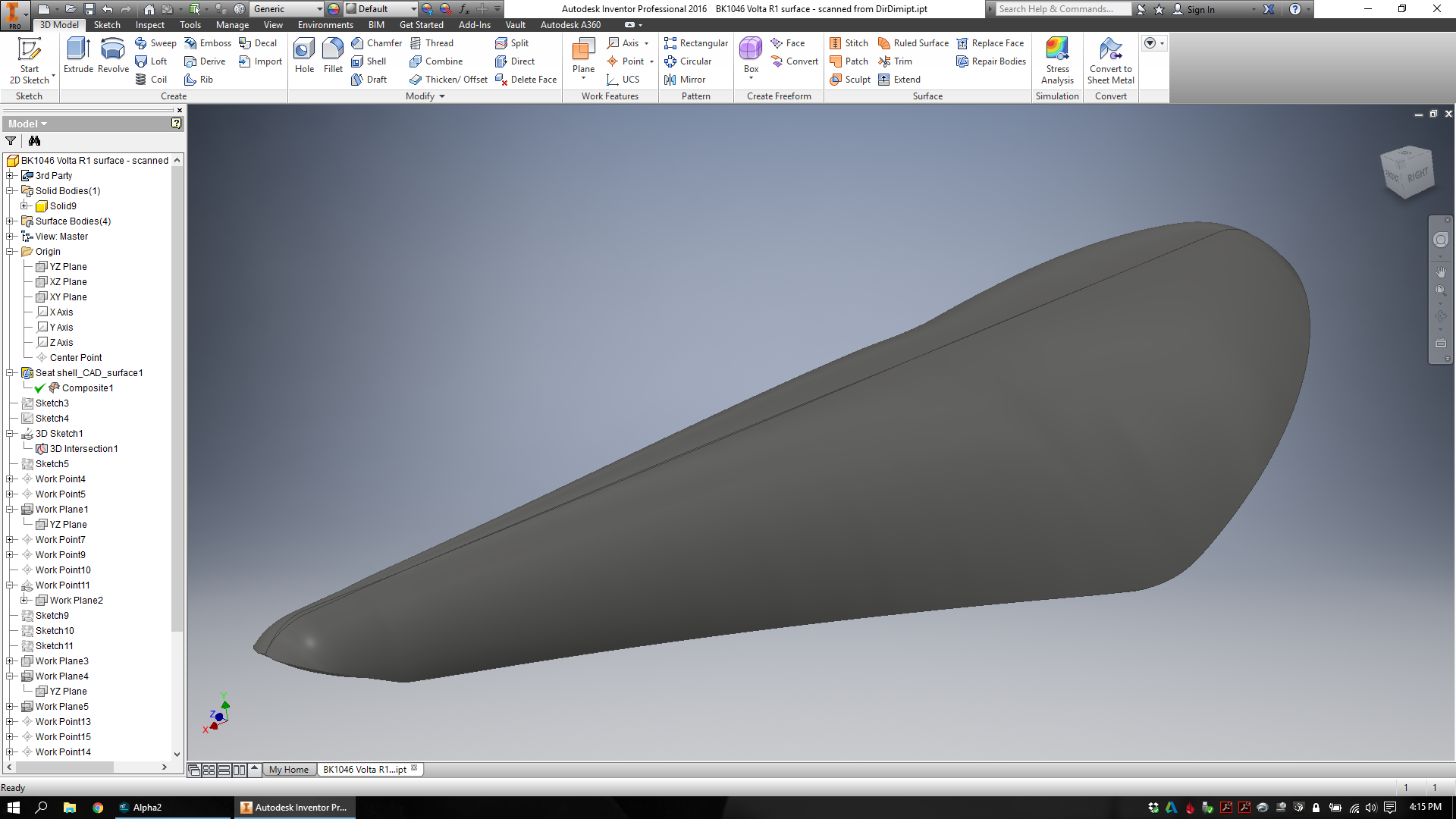

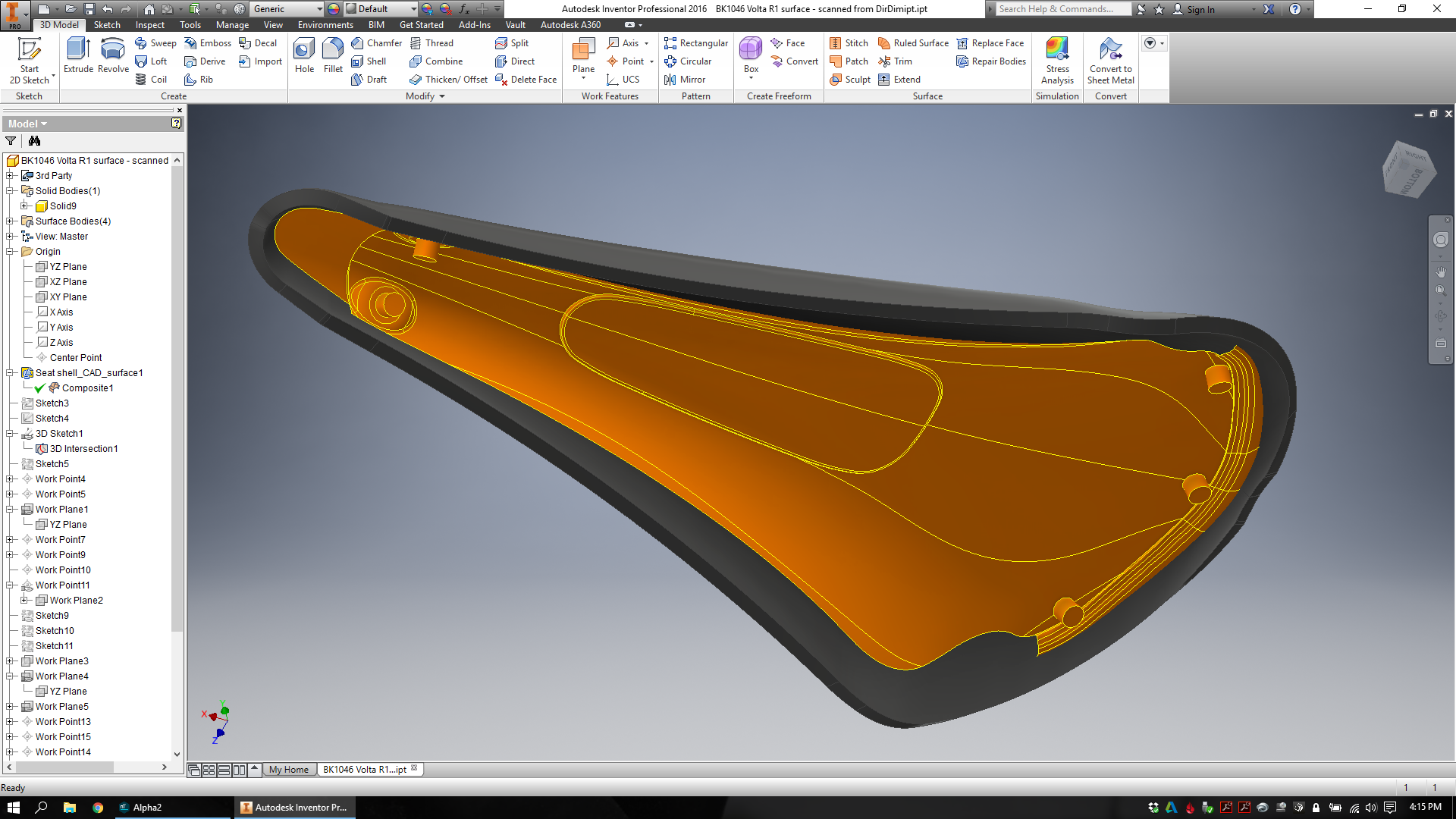

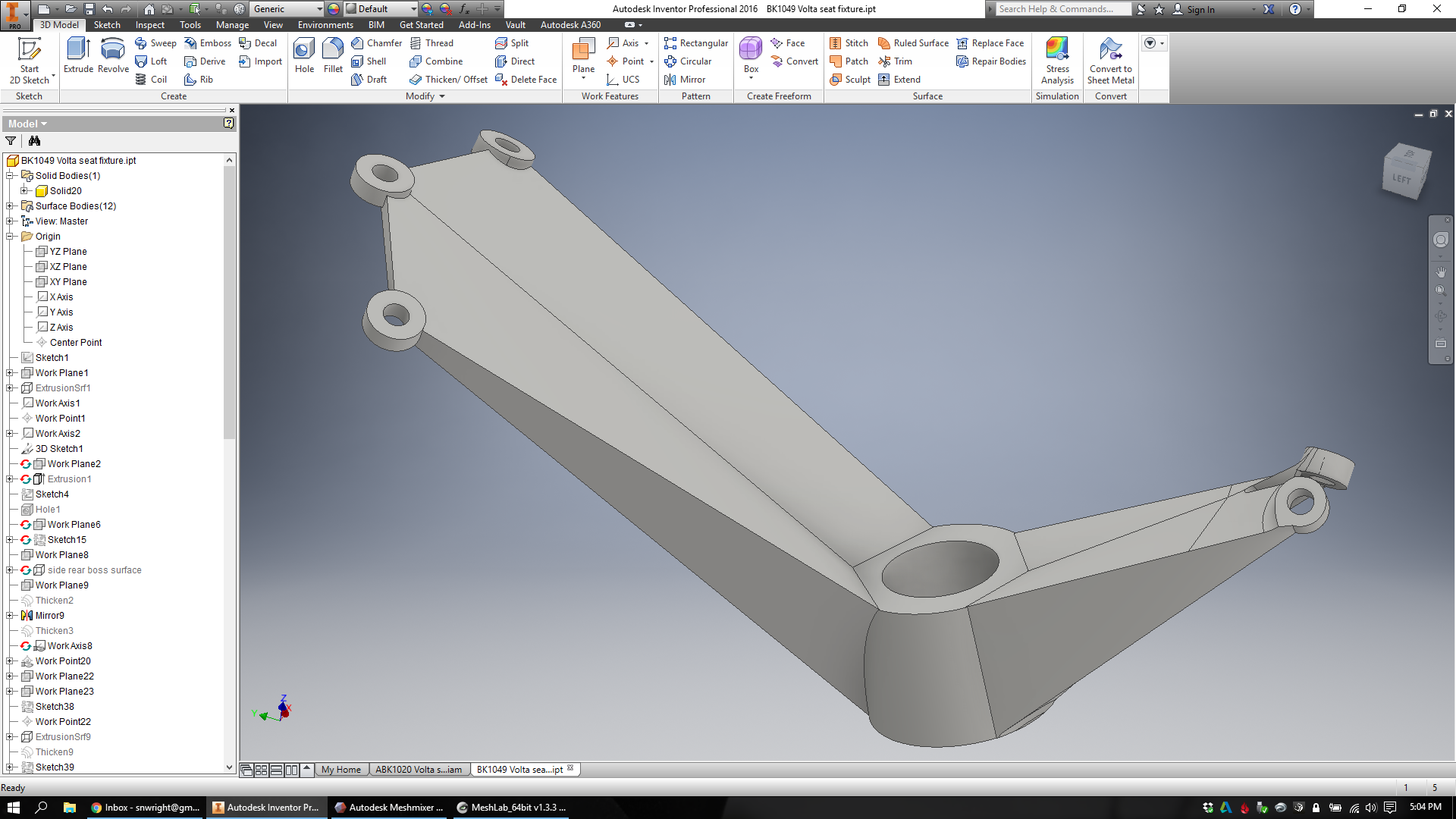

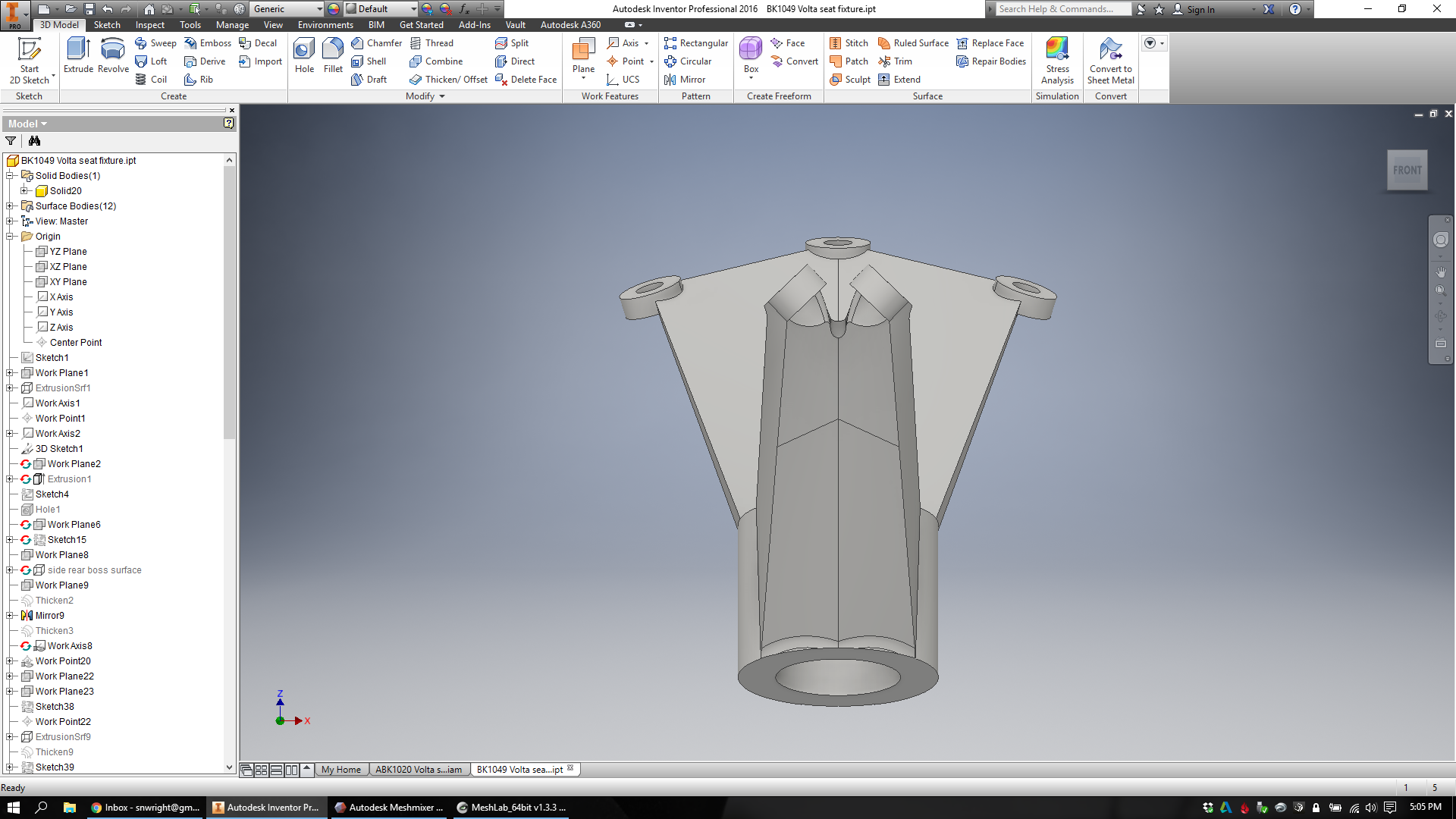

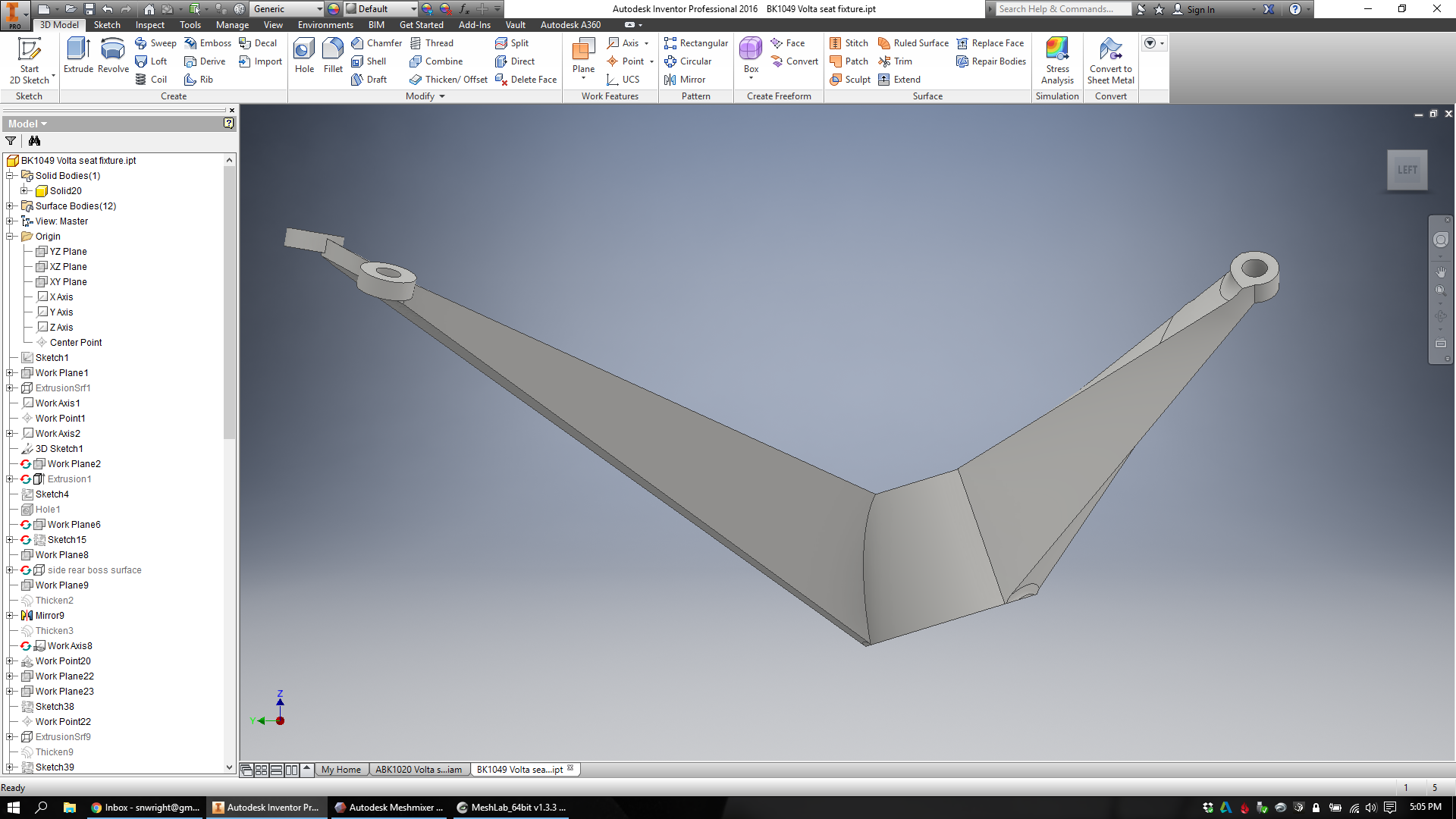

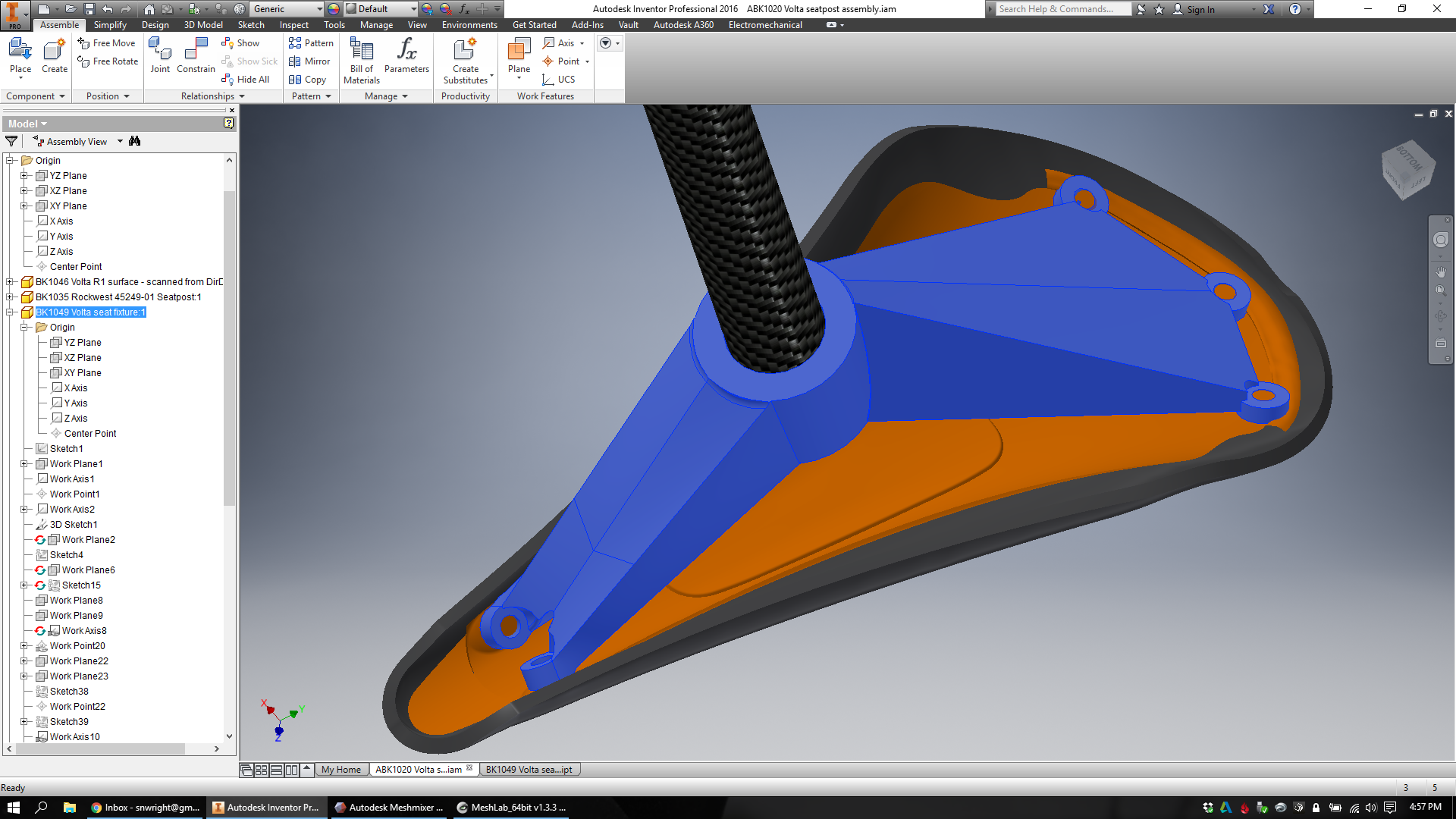

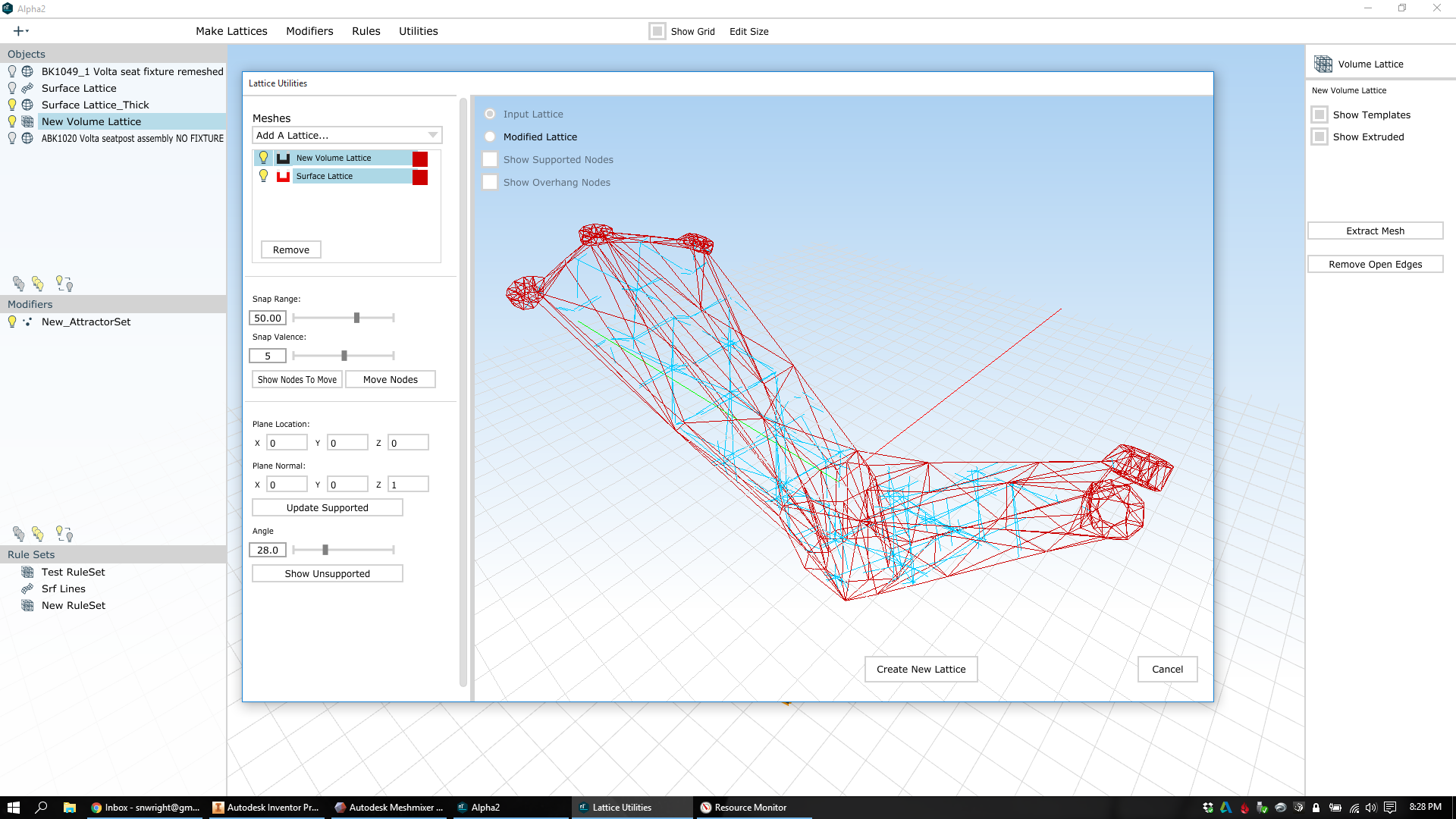

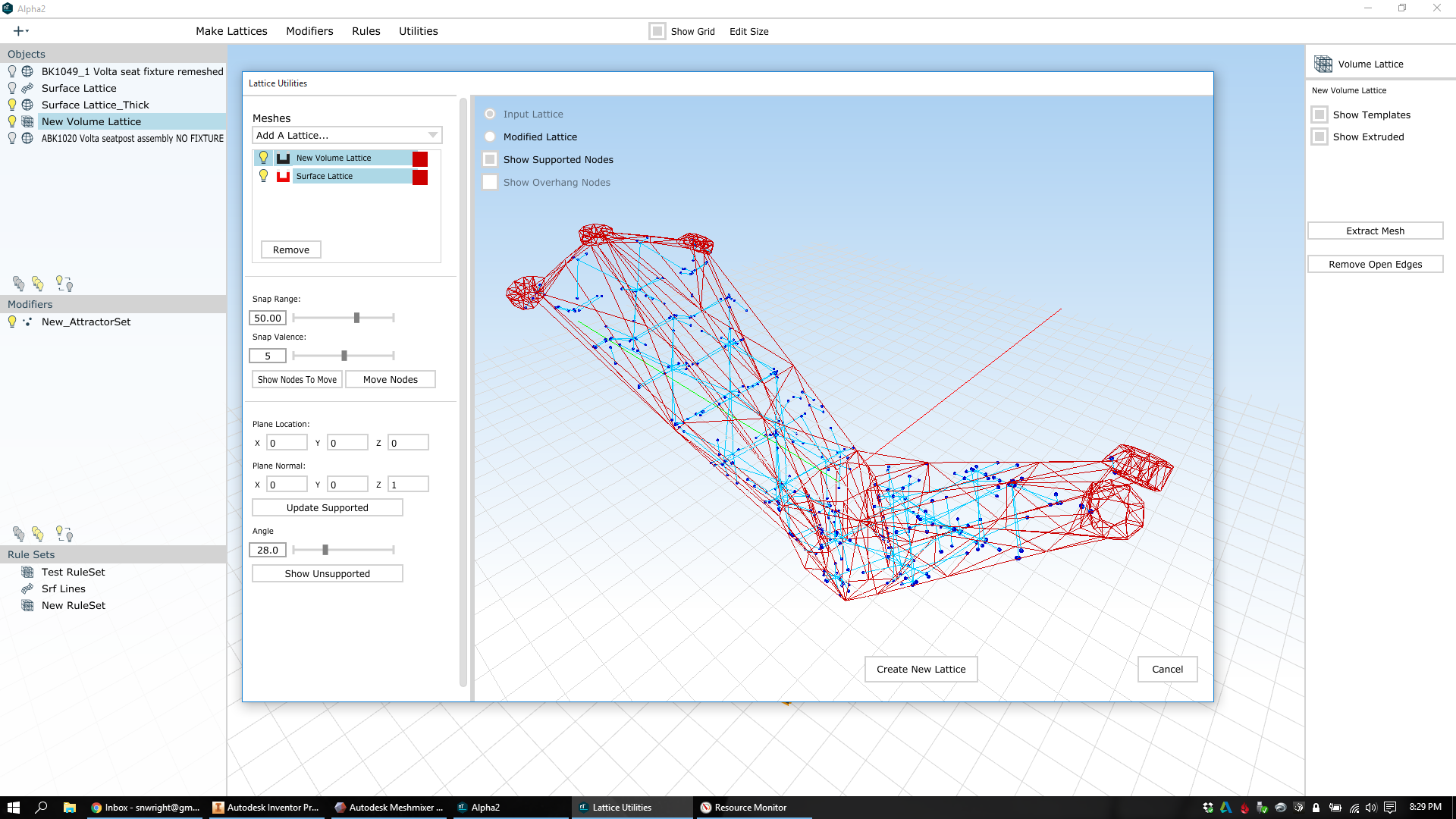

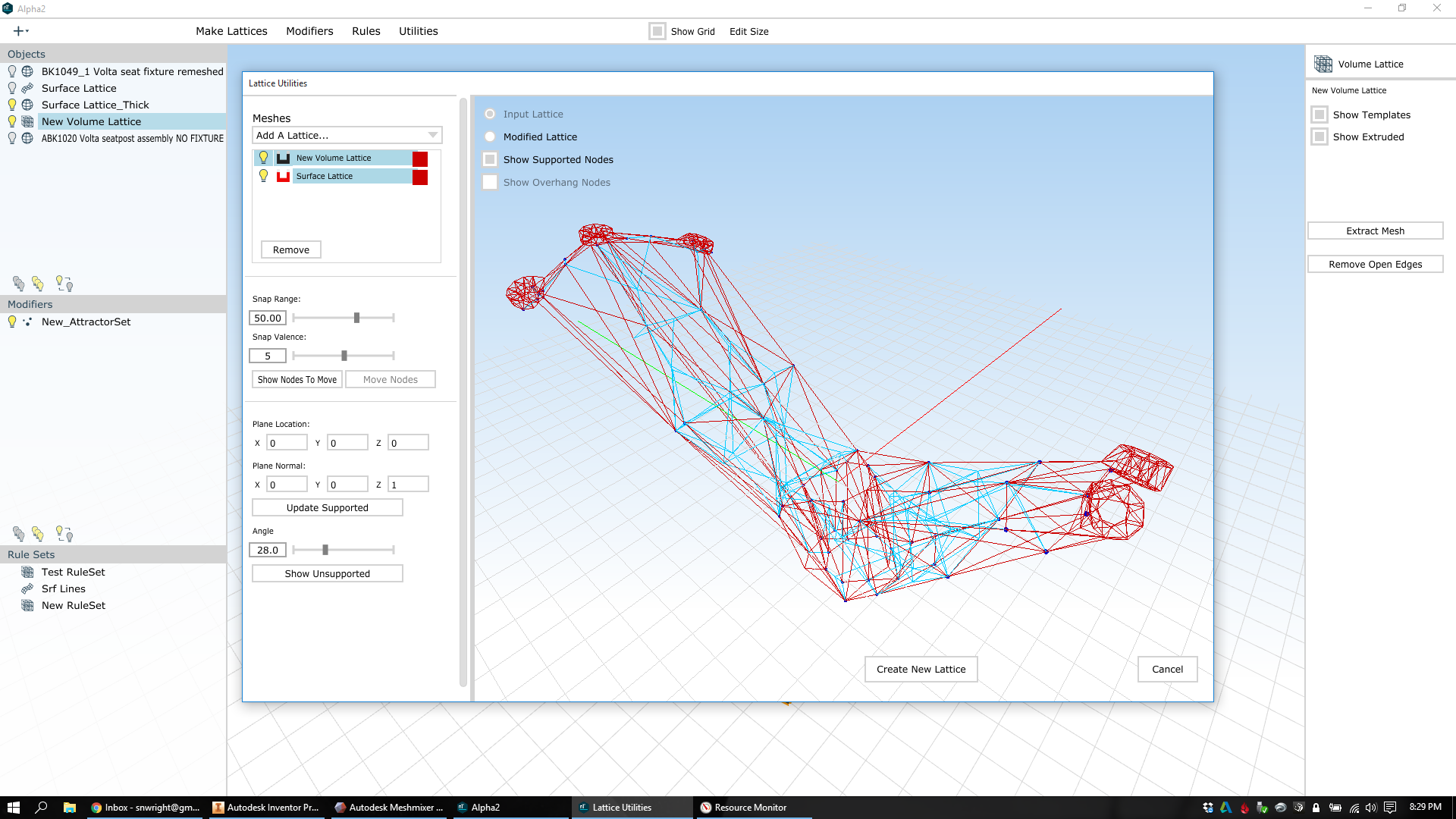

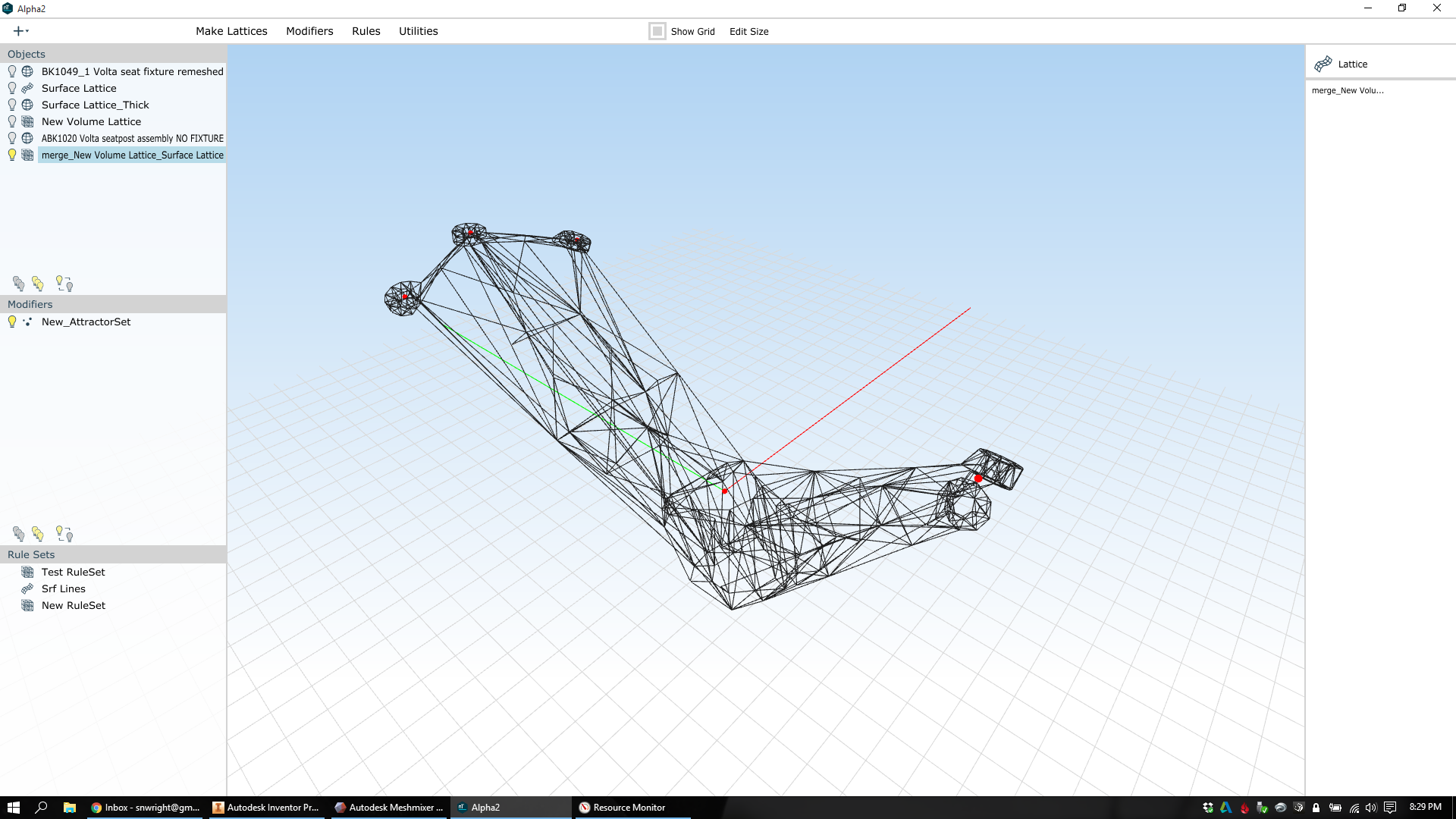

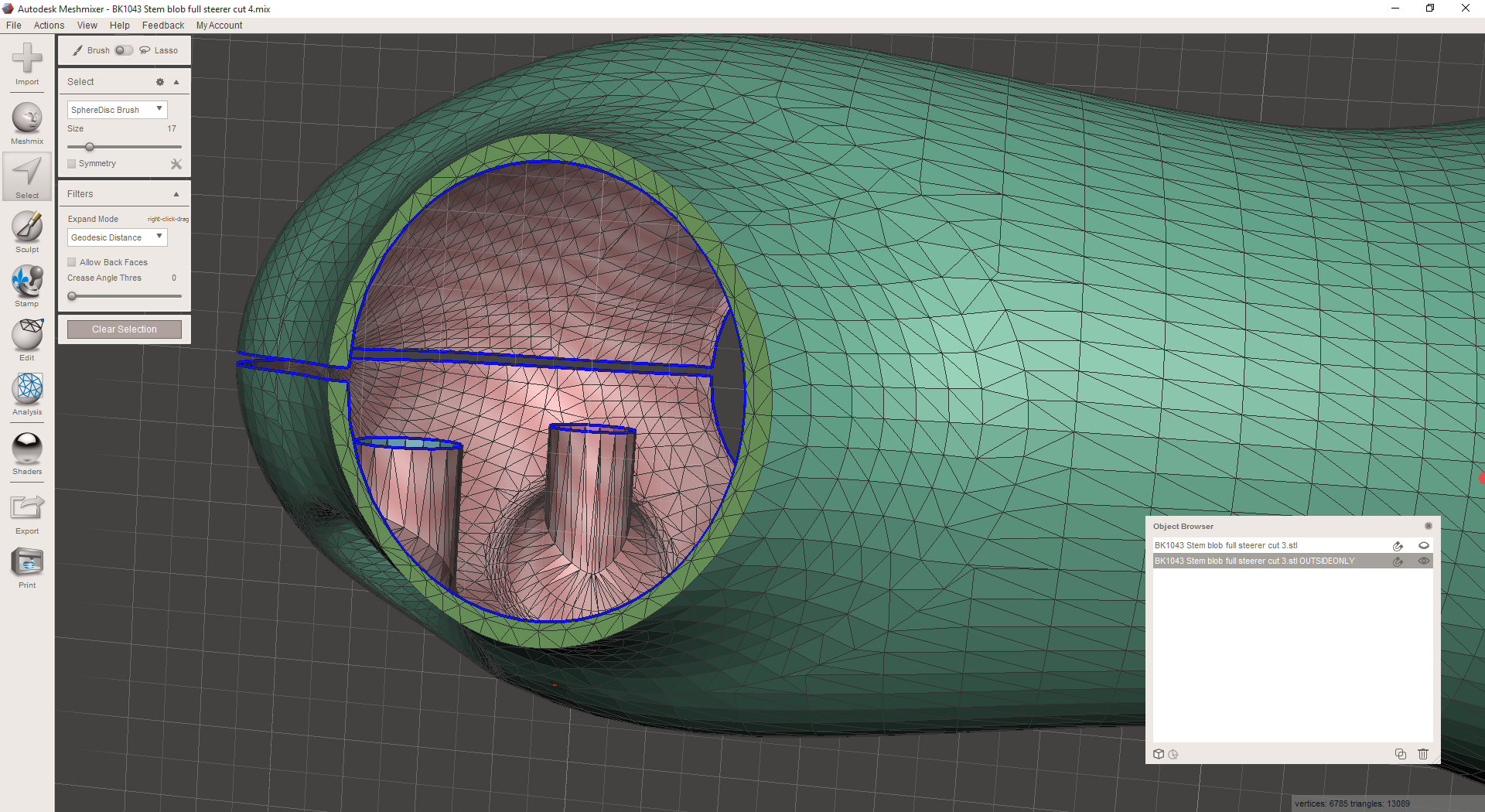

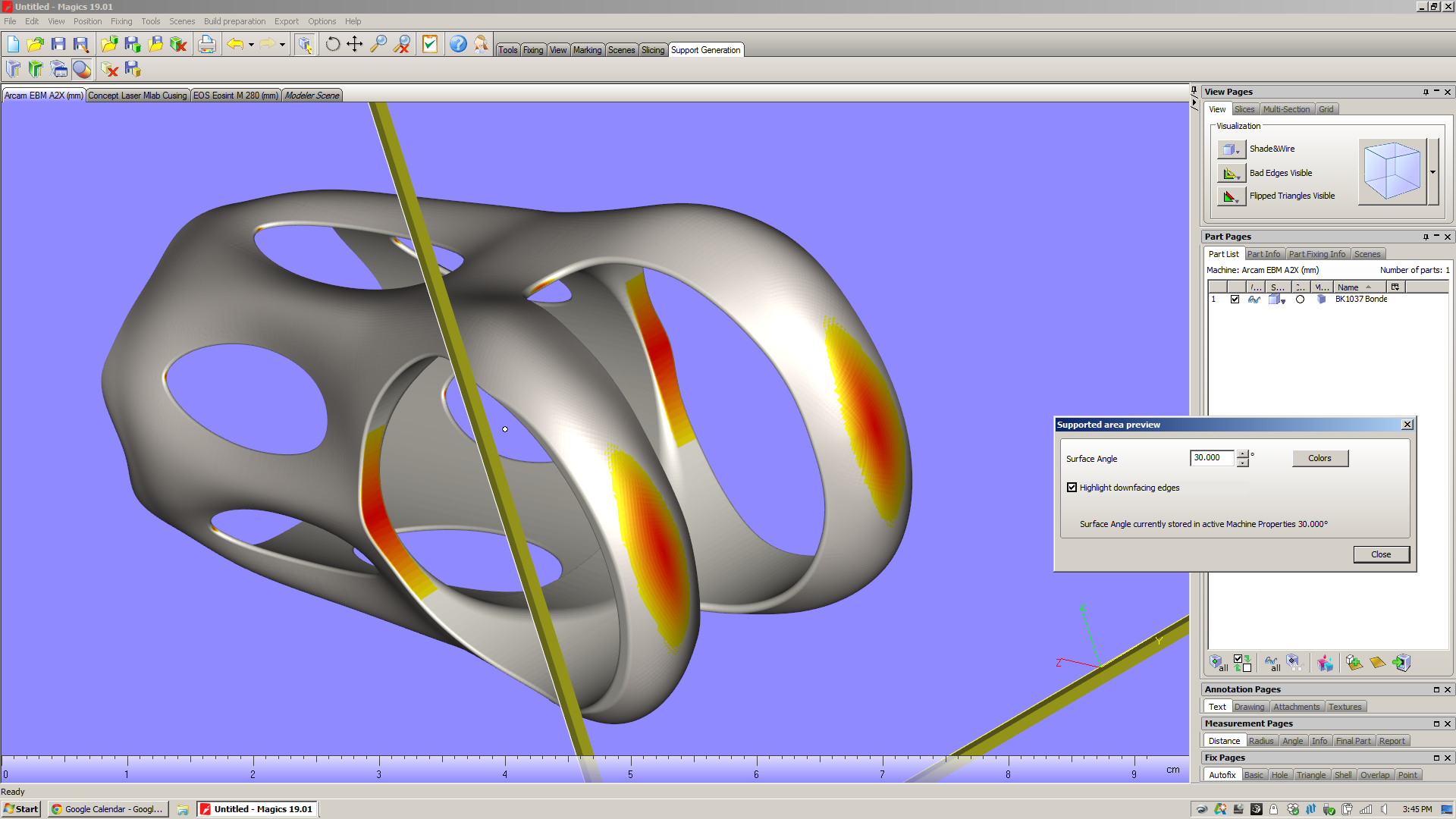

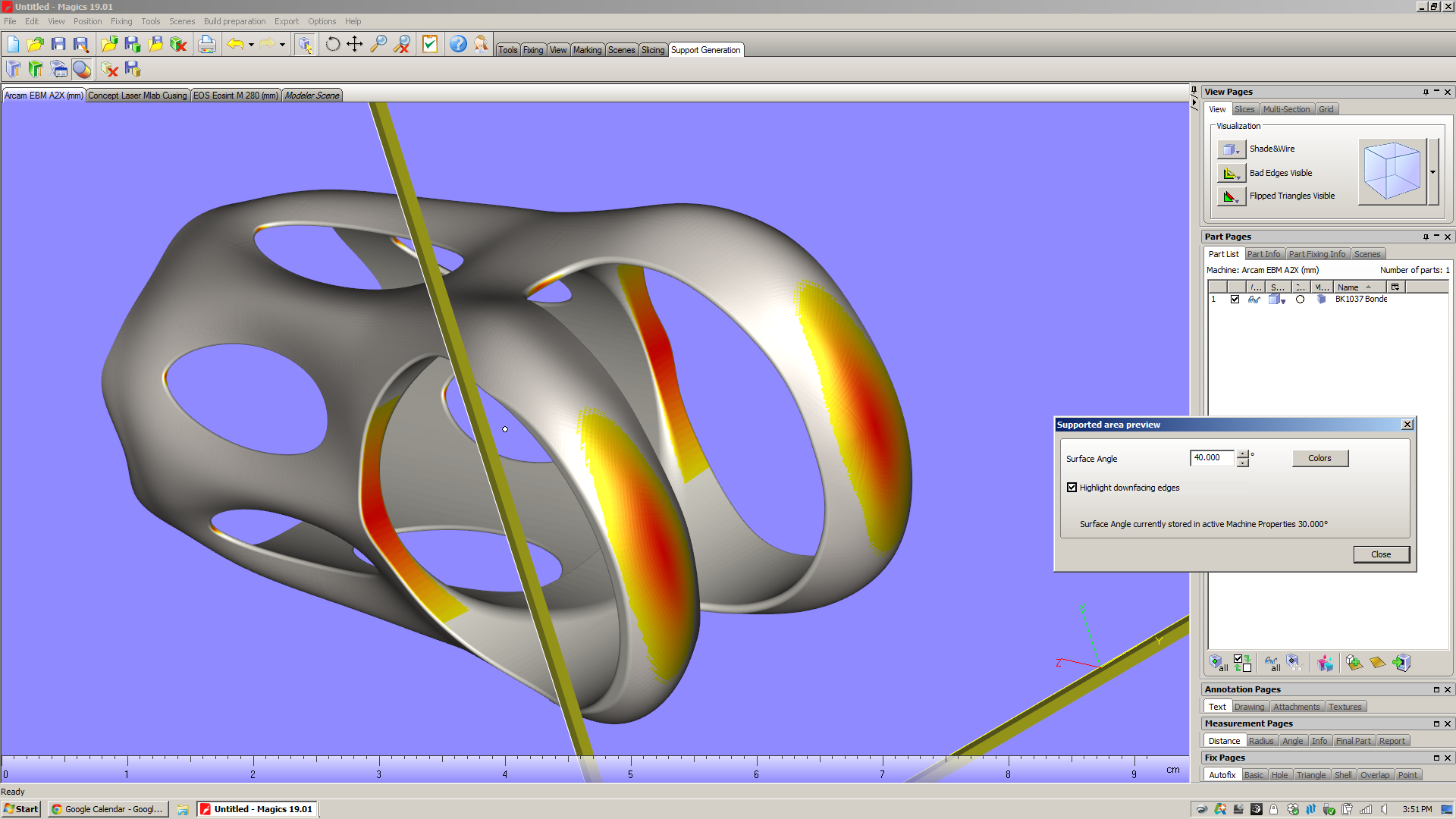

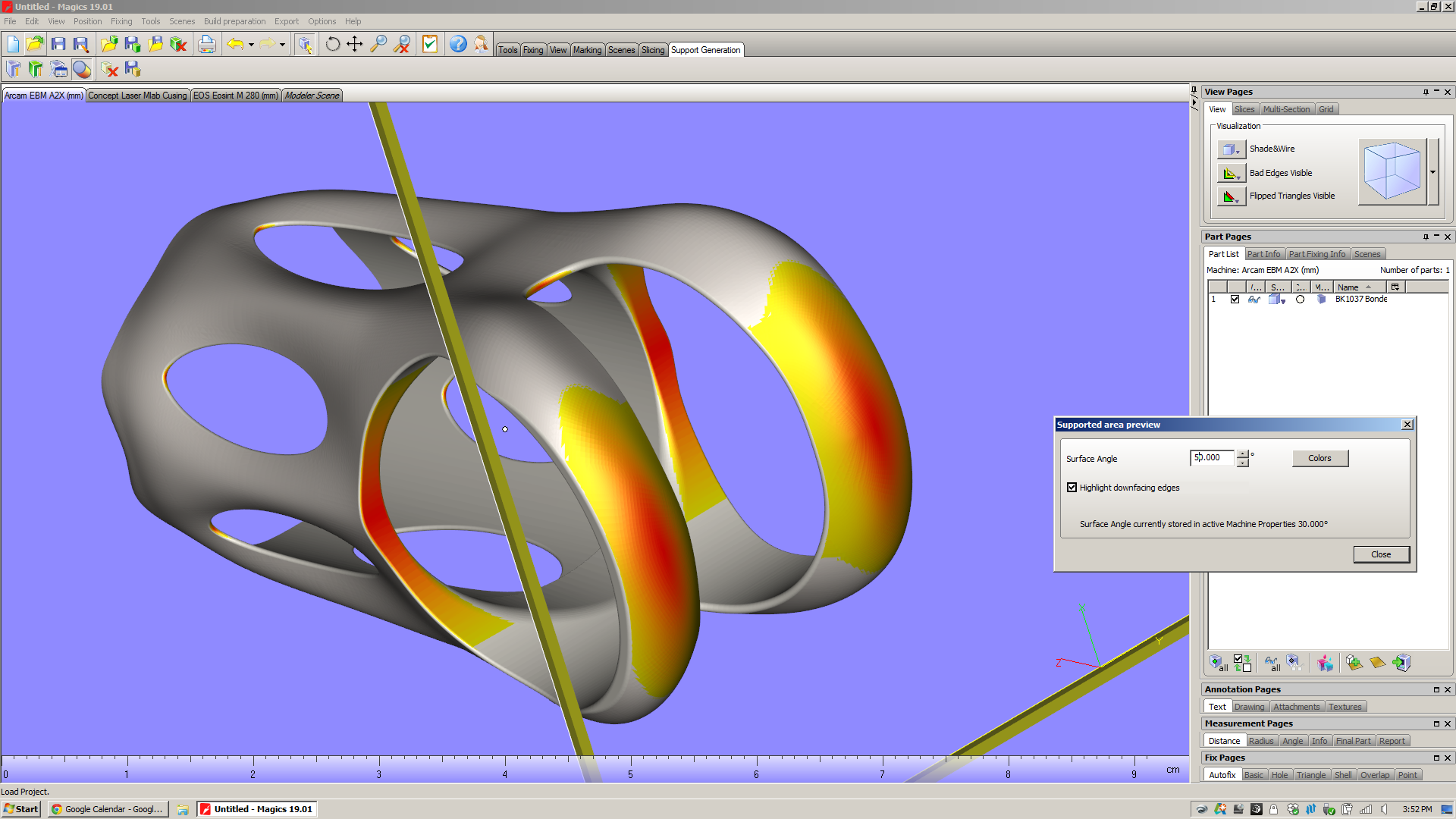

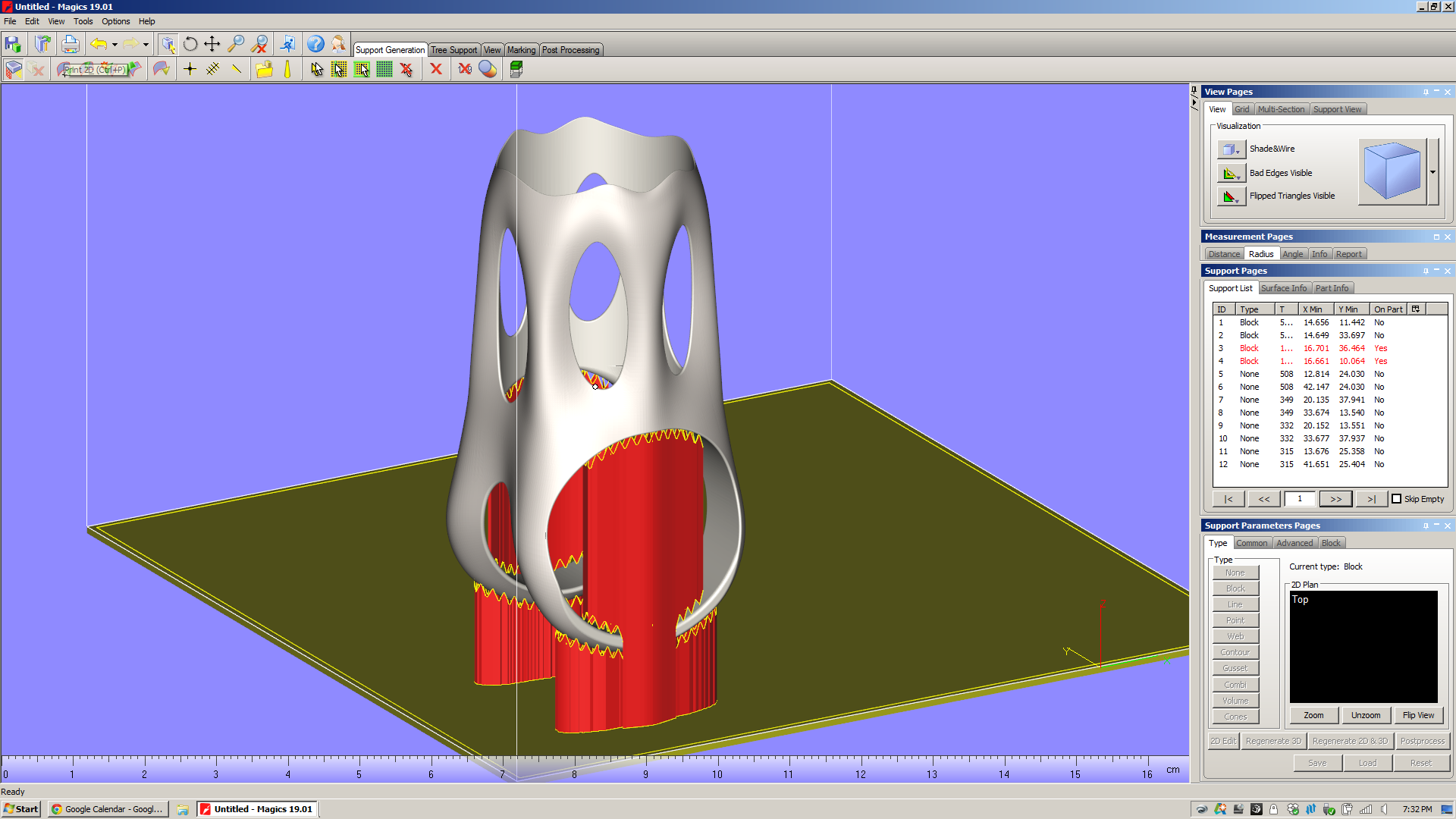

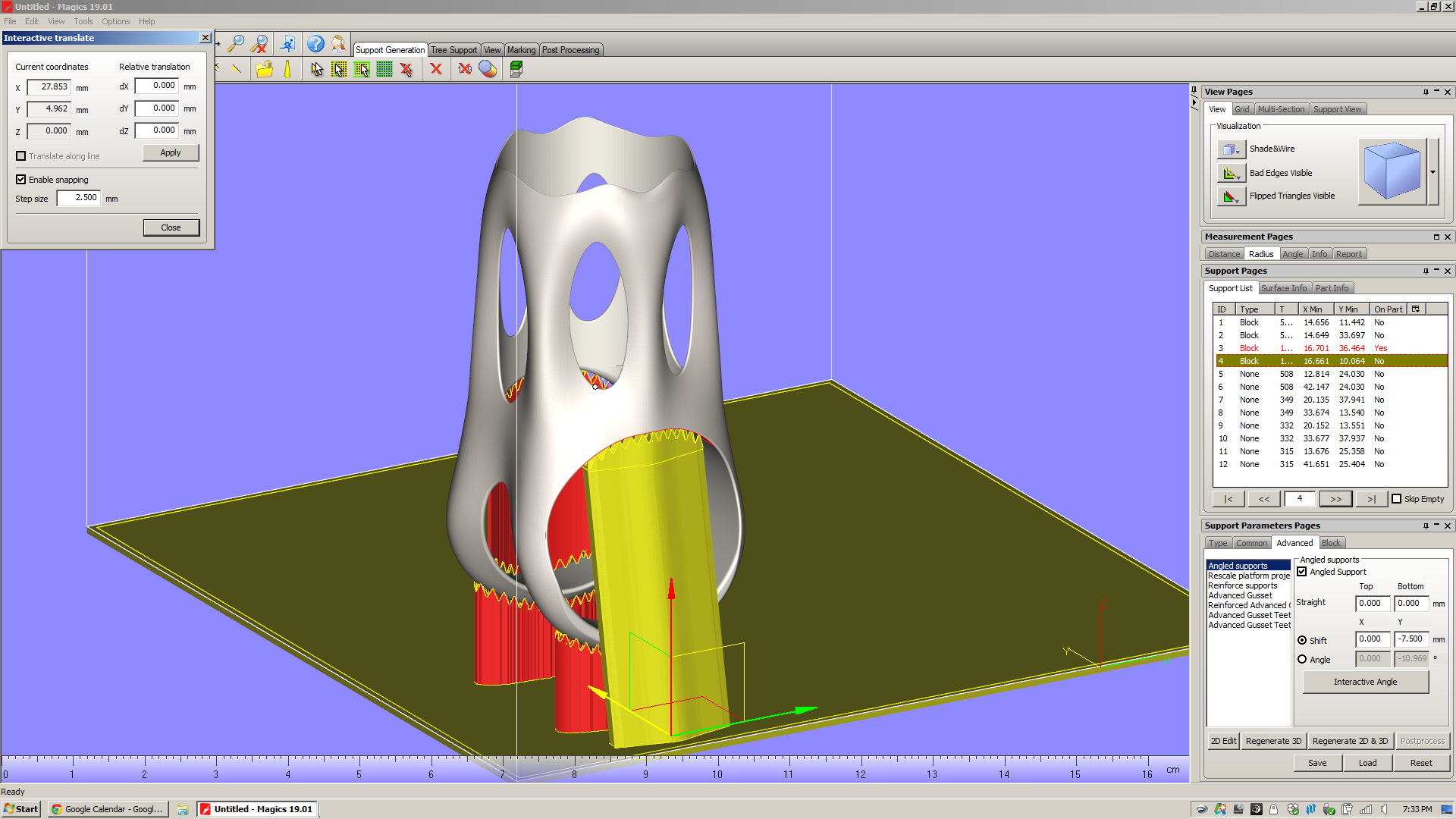

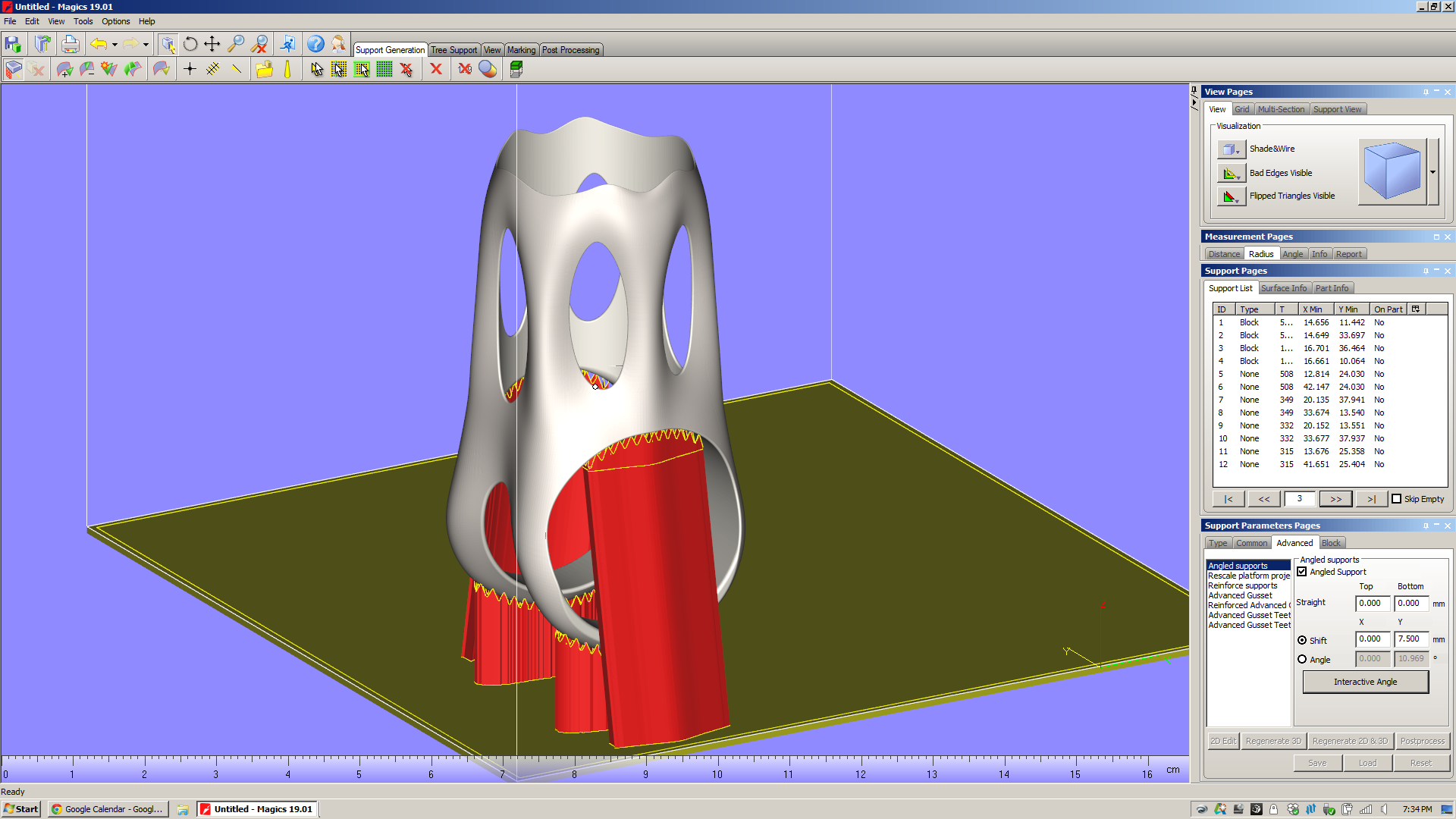

This is something I've touched on in previous posts, but it struck me again when I was in the "supported area preview" screen. It's *very* likely that I could, with a relatively small amount of work, edit the underlying geometry to significantly reduce the number of supports needed. But I'm not aware of a way of showing downfacing regions in solid modeling software (SolidWorks, Inventor, etc.), and it's rather cumbersome to bounce back and forth between Magics and Inventor to try to optimize the design for additive.

All across the industry today, I hear people talk about design software that understands the intent of the designer, and responds to accommodate it. This may be feasible in the near future, but the bottom line is that Magics (as it stands now) is *not* part of that process chain. Once a designer transitions from parametric modeling to surface tessellations, all of the parametric data (features, dimensions, and the design intent behind them) is lost; only a mesh of triangles remains. If manufacturability feedback (like the supported area preview screen) is provided in software that reads surface tessellations (as Magics does), then going back to edit the underlying parametric model is *always* going to be cumbersome - and necessary.
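For what it's worth, flagging downfacing regions on a tessellated model takes very little: all you need are the facet normals. Here's a minimal sketch, assuming a build direction of +Z, triangles given as three `(x, y, z)` vertex tuples with outward-facing winding, and a hypothetical 45 degree critical angle measured from the build plate. I have no idea how Magics' own supported area preview is implemented; this is only the textbook version.

```python
import math


def facet_normal(a, b, c):
    """Unit normal of triangle (a, b, c); (0, 0, 0) for a degenerate facet."""
    u = [b[i] - a[i] for i in range(3)]
    v = [c[i] - a[i] for i in range(3)]
    n = (u[1] * v[2] - u[2] * v[1],
         u[2] * v[0] - u[0] * v[2],
         u[0] * v[1] - u[1] * v[0])
    length = math.sqrt(sum(x * x for x in n))
    if length == 0.0:
        return (0.0, 0.0, 0.0)
    return tuple(x / length for x in n)


def needs_support(normal, critical_angle_deg=45.0):
    """True if the facet faces down and is shallower than the critical angle.

    The angle is measured between the facet and the build plate (XY plane),
    so a flat downfacing facet is at 0 degrees and a vertical wall at 90.
    The 45 degree default is an assumed placeholder, not a machine value.
    """
    return normal[2] < -math.cos(math.radians(critical_angle_deg))


def downfacing_facets(triangles, critical_angle_deg=45.0):
    """Indices of the triangles that would be flagged as needing support."""
    return [i for i, (a, b, c) in enumerate(triangles)
            if needs_support(facet_normal(a, b, c), critical_angle_deg)]
```

The catch is the one described above: the mesh can tell you *which* triangles are a problem, but not which sketch, fillet, or extrusion in the parametric model produced them.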

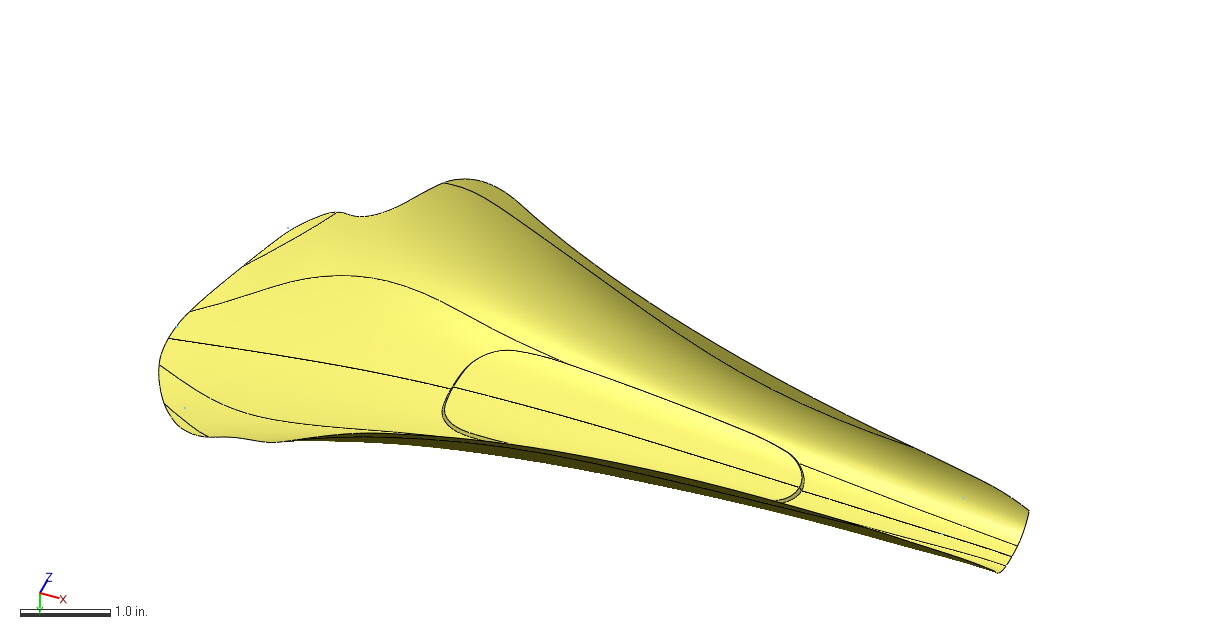

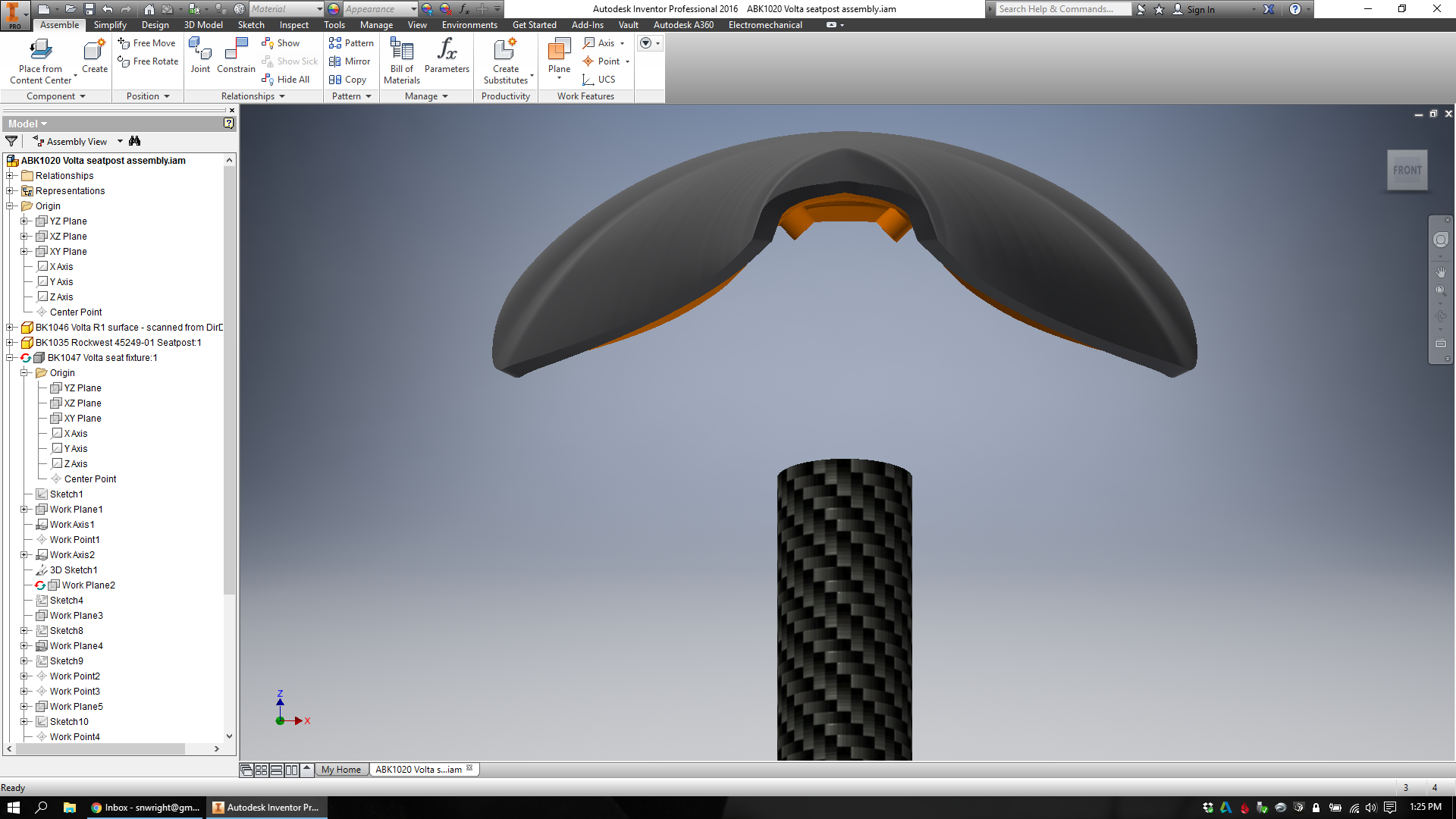

Understand/display surface quality issues due to orientation

In all additive processes that I'm aware of, surface finish will vary significantly depending on the orientation of a surface relative to the build direction. Given the layer thickness of the printed part, this is relatively straightforward to simulate - not to a high degree of precision, but with a good amount of accuracy, at least. Magics doesn't do this, and it leaves me feeling like I'm missing a key piece of information about the printed part. Sure, I can imagine what the part will look like if I just think about it for a minute, but it does strike me that having some indication of areas with high stepover (which will occur wherever a surface is oriented close to the XY plane) would be really helpful - and not particularly hard to implement (caveat: everything I said above about feature creep, etc).
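As a rough illustration of how simple a first-order version could be: if you treat each layer as a flat slab, the width of the stair step on a sloped surface is just the layer thickness divided by the tangent of the surface's angle from the XY plane. The sketch below assumes exactly that idealized geometry and nothing else (no melt pool effects, no powder adhesion, no difference between upfacing and downfacing skin), and the function name and inputs are my own.

```python
import math


def step_width_mm(angle_from_xy_deg: float, layer_thickness_mm: float) -> float:
    """Idealized stair-step width for a surface inclined from the XY plane.

    angle_from_xy_deg: 0 means parallel to the build plate, 90 means a
    vertical wall. A surface lying exactly in the XY plane sits inside a
    single layer, so it has no steps at all and 0.0 is returned.
    """
    if not 0.0 <= angle_from_xy_deg <= 90.0:
        raise ValueError("angle must be between 0 and 90 degrees")
    if angle_from_xy_deg == 0.0:
        return 0.0
    return layer_thickness_mm / math.tan(math.radians(angle_from_xy_deg))


if __name__ == "__main__":
    # Placeholder layer thickness purely for illustration.
    for angle in (5, 15, 45, 75):
        print(f"{angle:>2} deg from XY: step width {step_width_mm(angle, 0.05):.3f} mm")
```

Color a mesh by that number and you'd get at least a crude map of where the stepping will be most visible: it blows up as a surface approaches (but doesn't quite reach) the XY plane, and shrinks toward zero on vertical walls.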

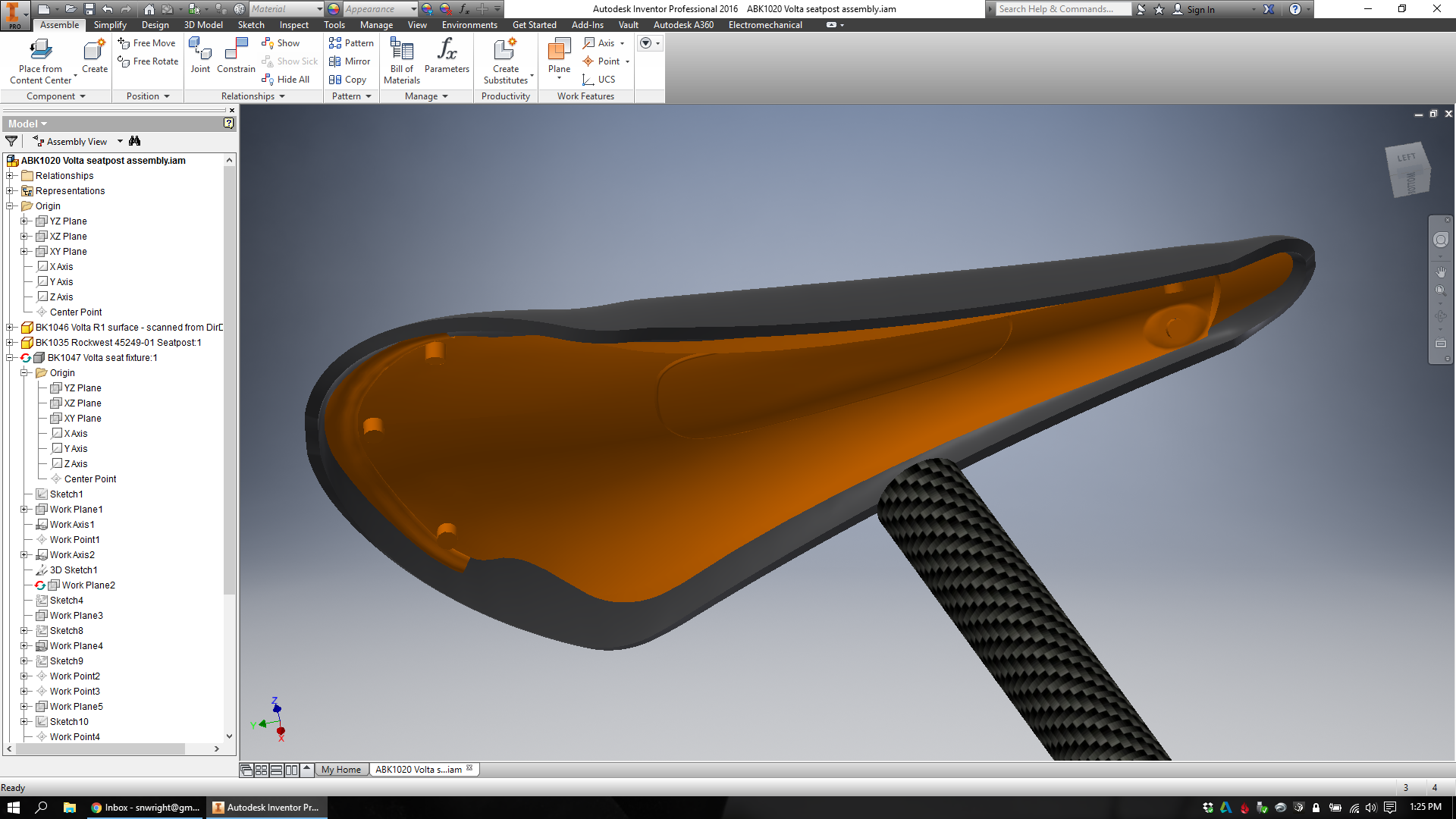

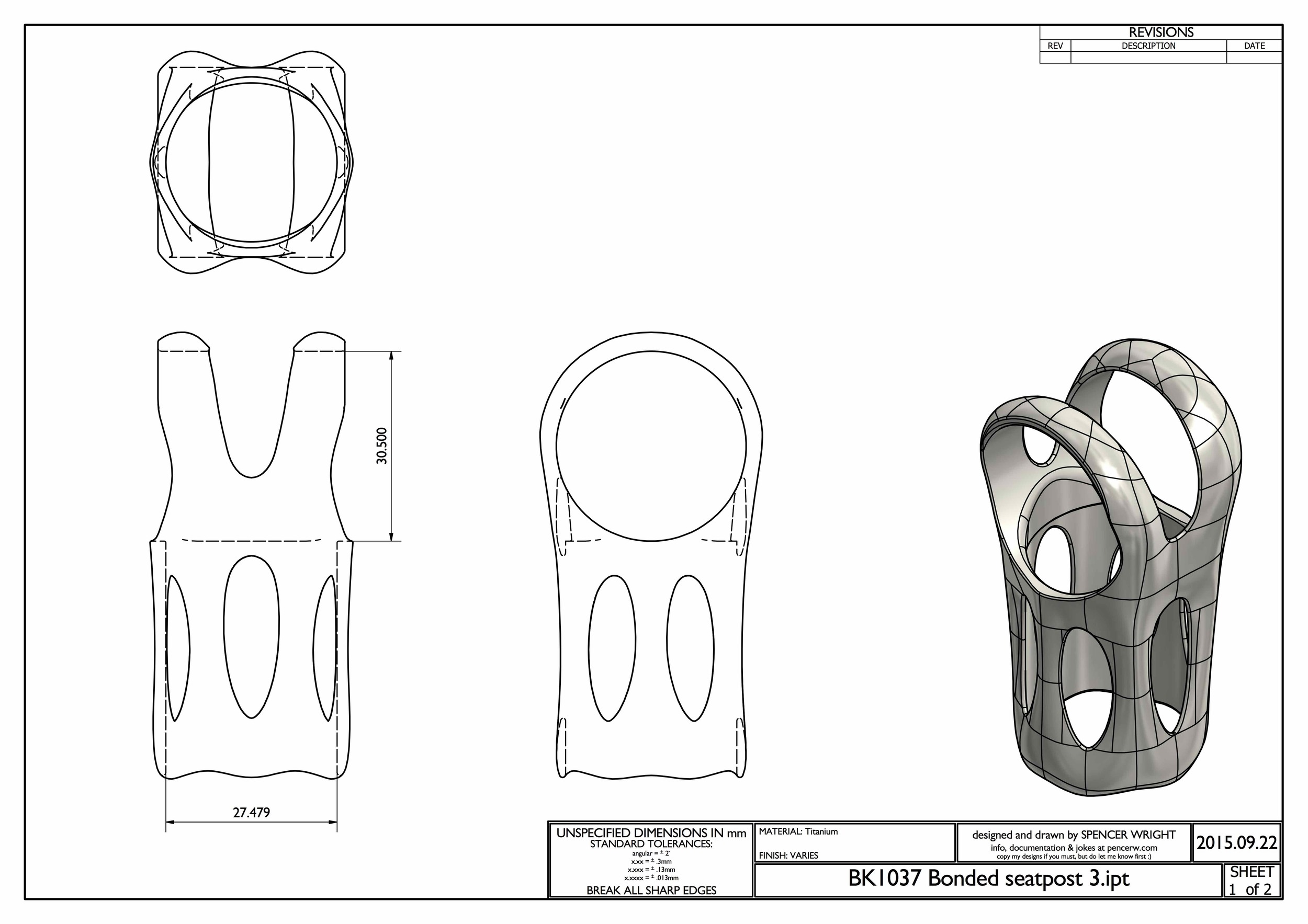

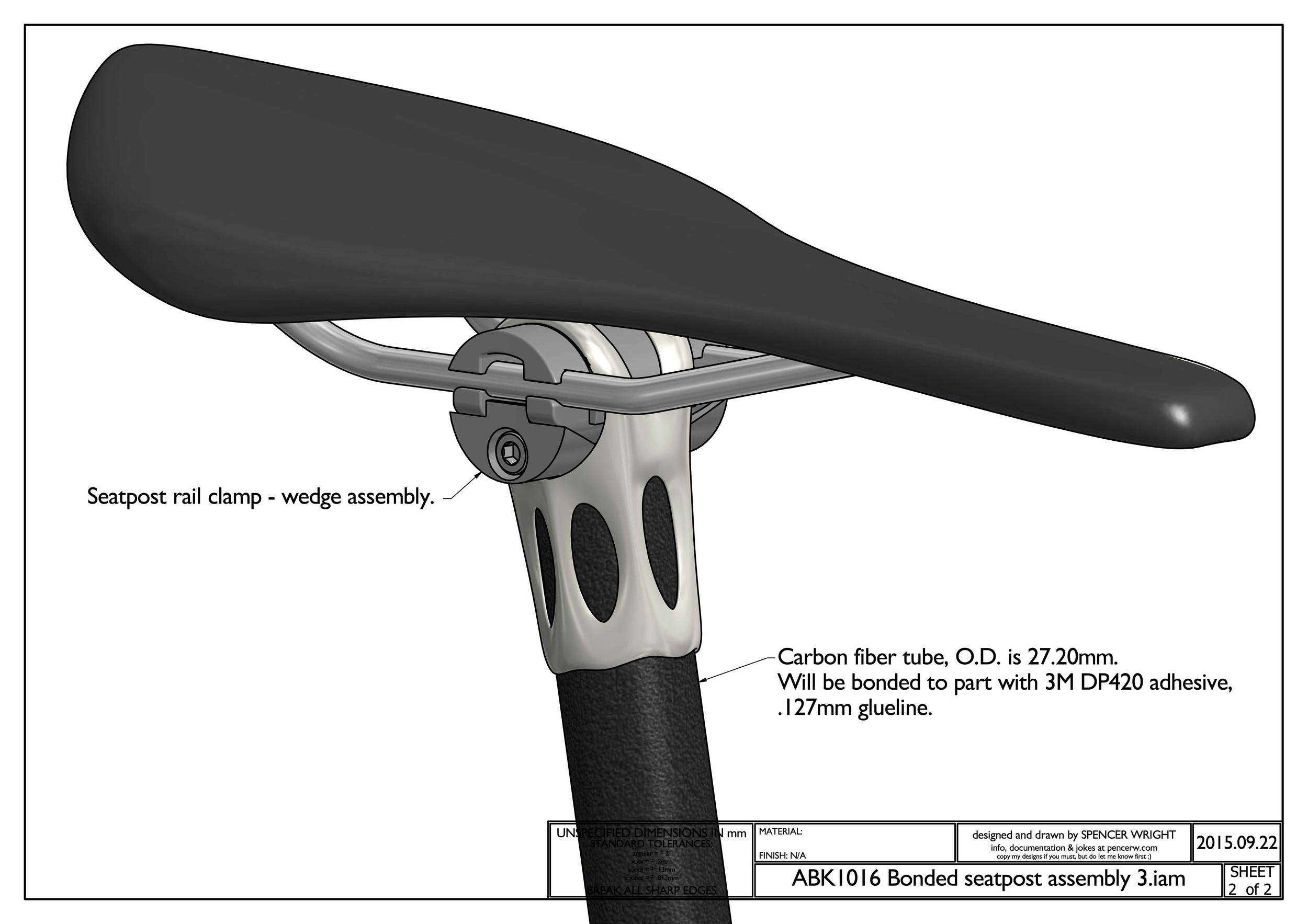

Understand the place of additive in the process chain

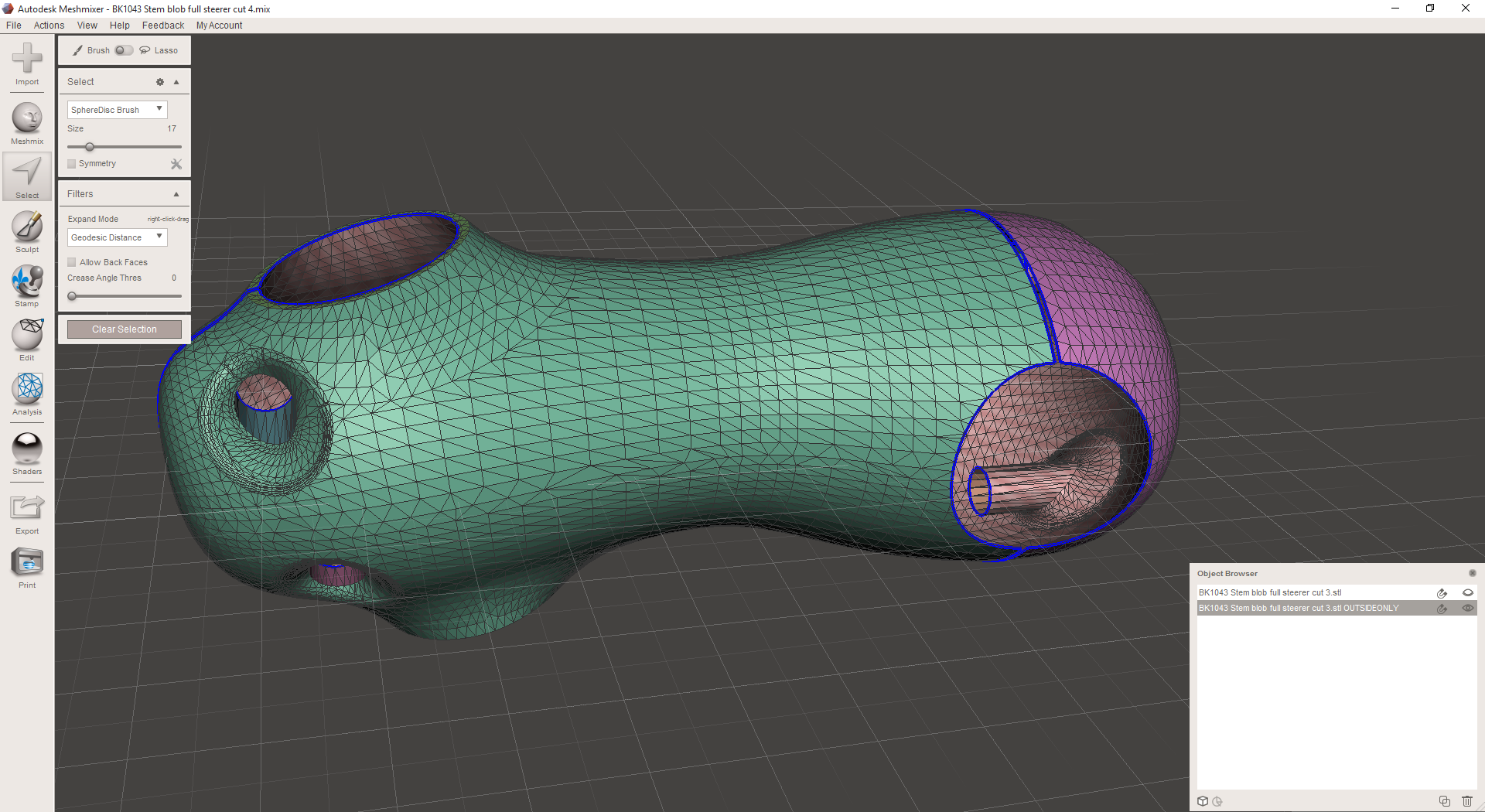

This may seem like I'm splitting hairs, but I think it's worth reiterating: Magics bills itself as "The link between your CAD file and the printed part." It is NOT concerned with the end product, which in almost all cases will have additional (subtractive) processes performed on it.

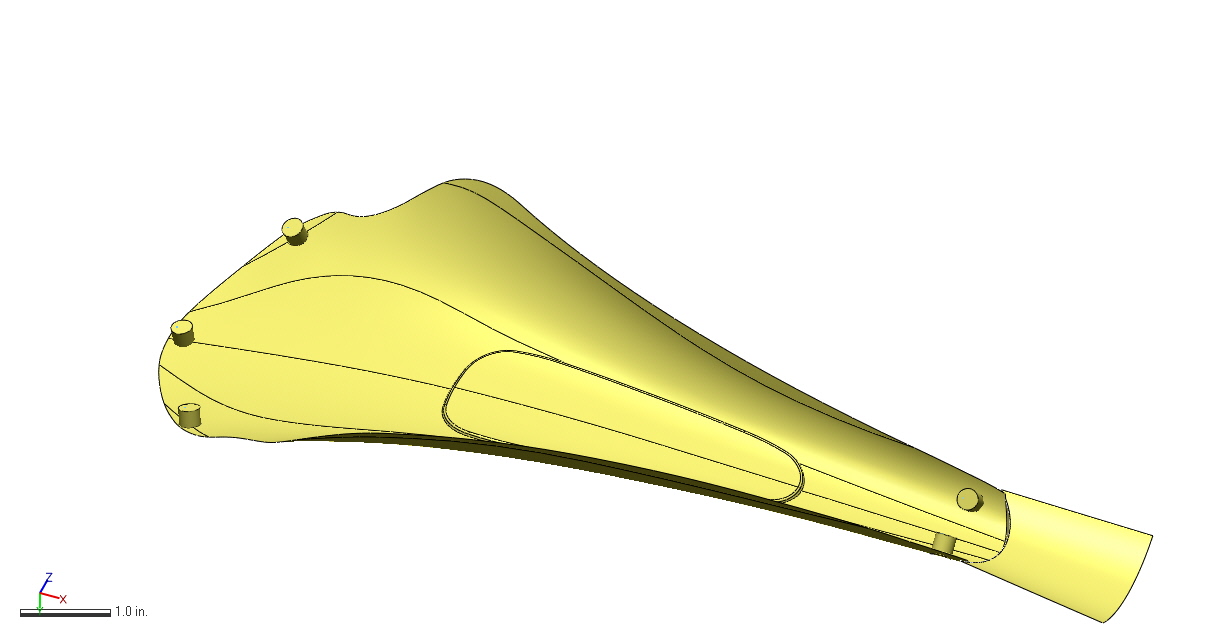

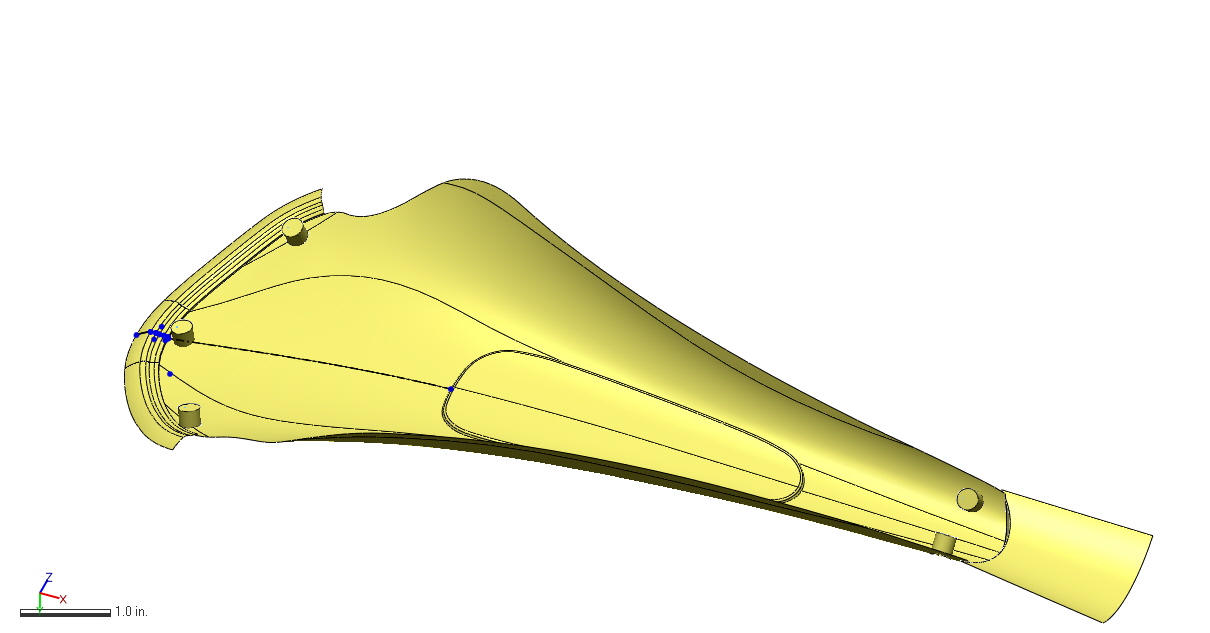

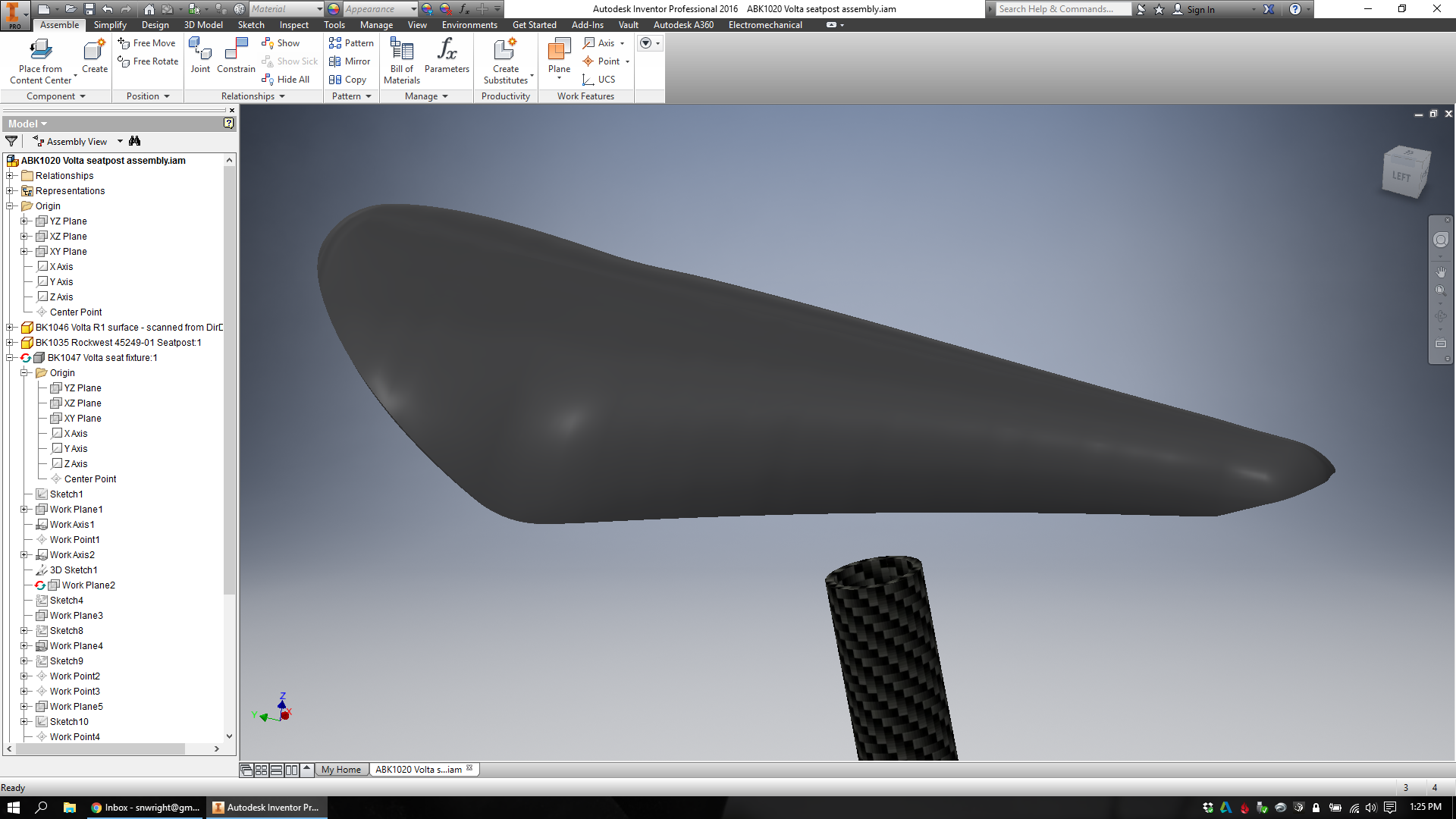

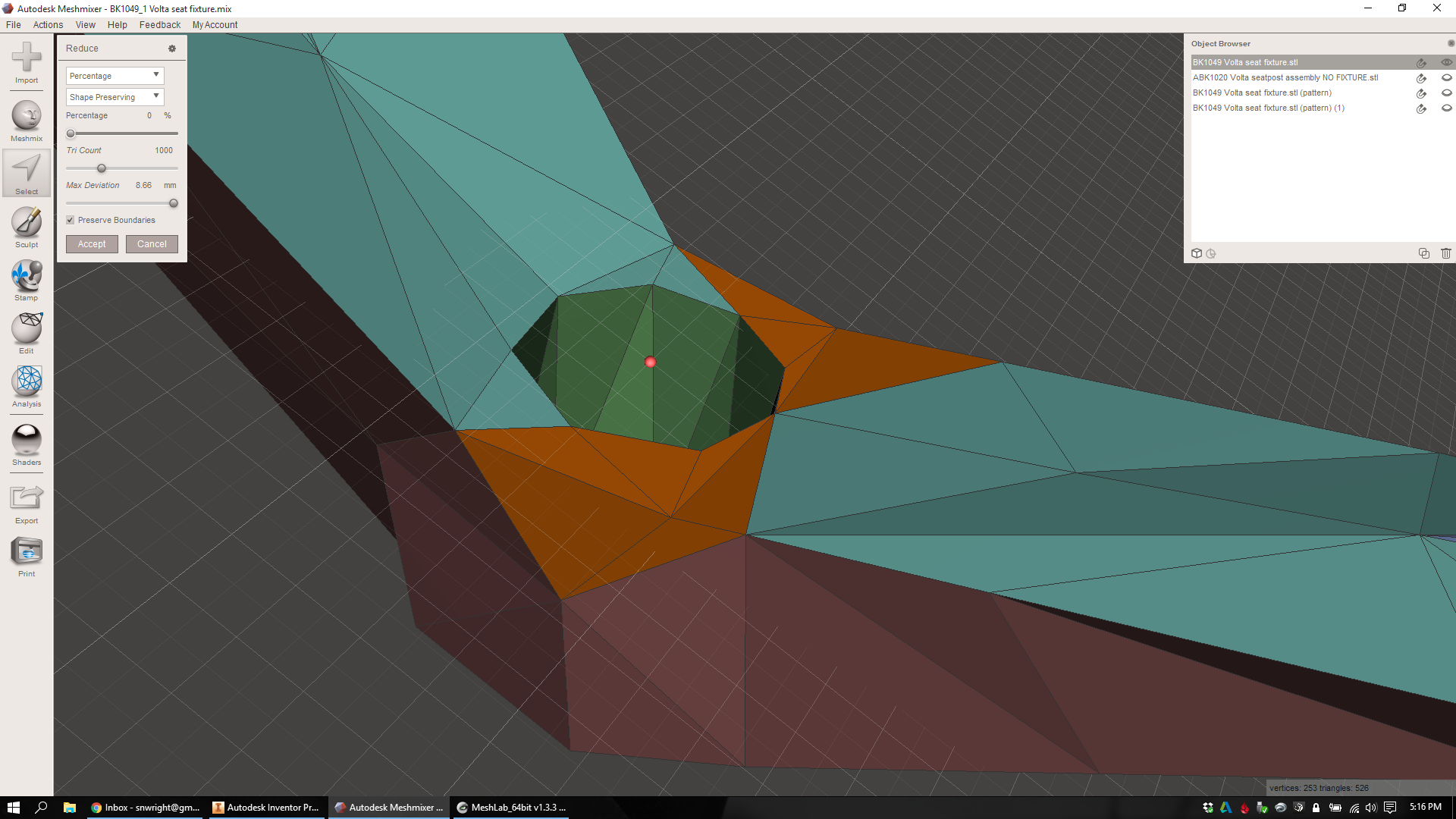

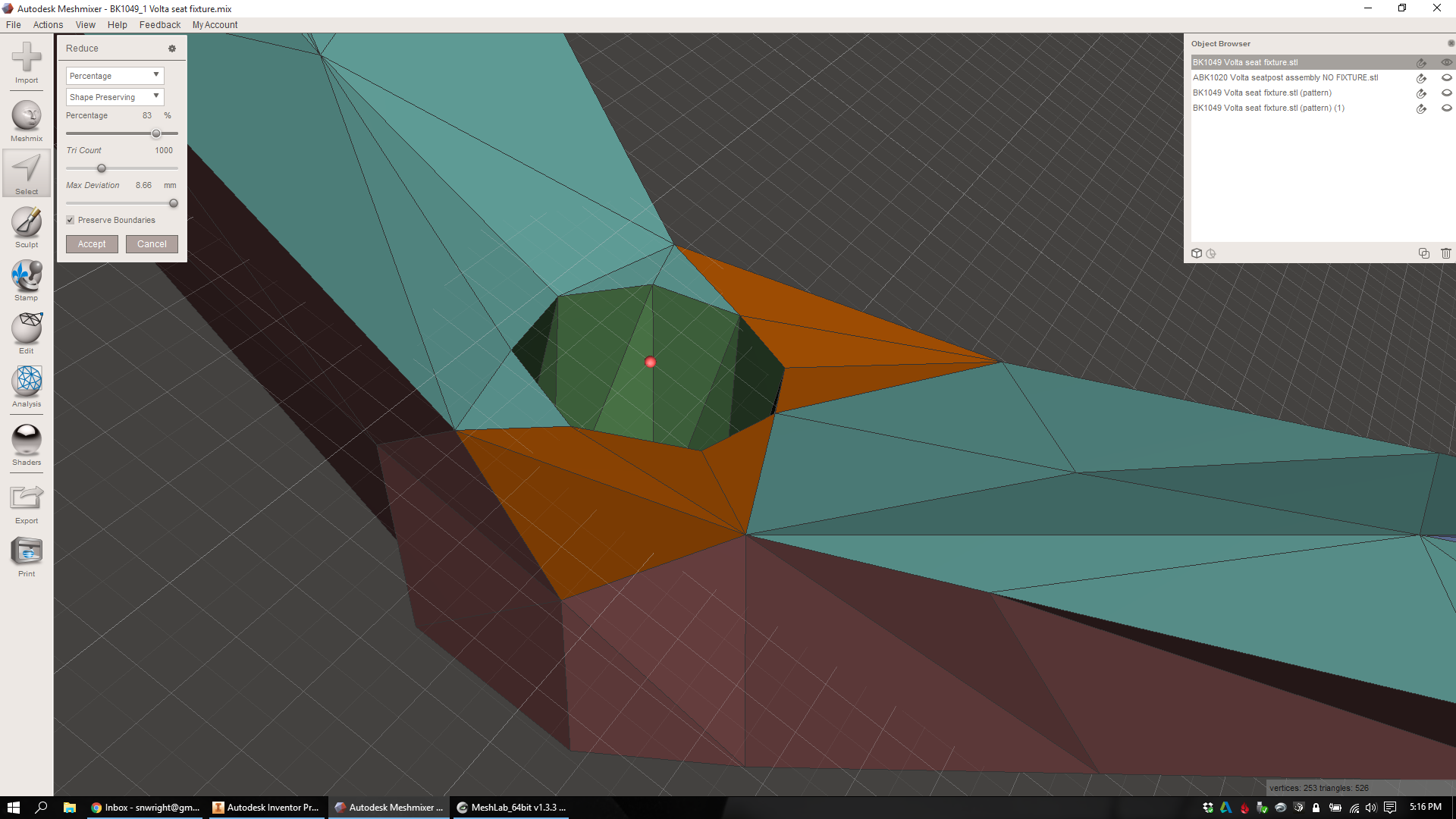

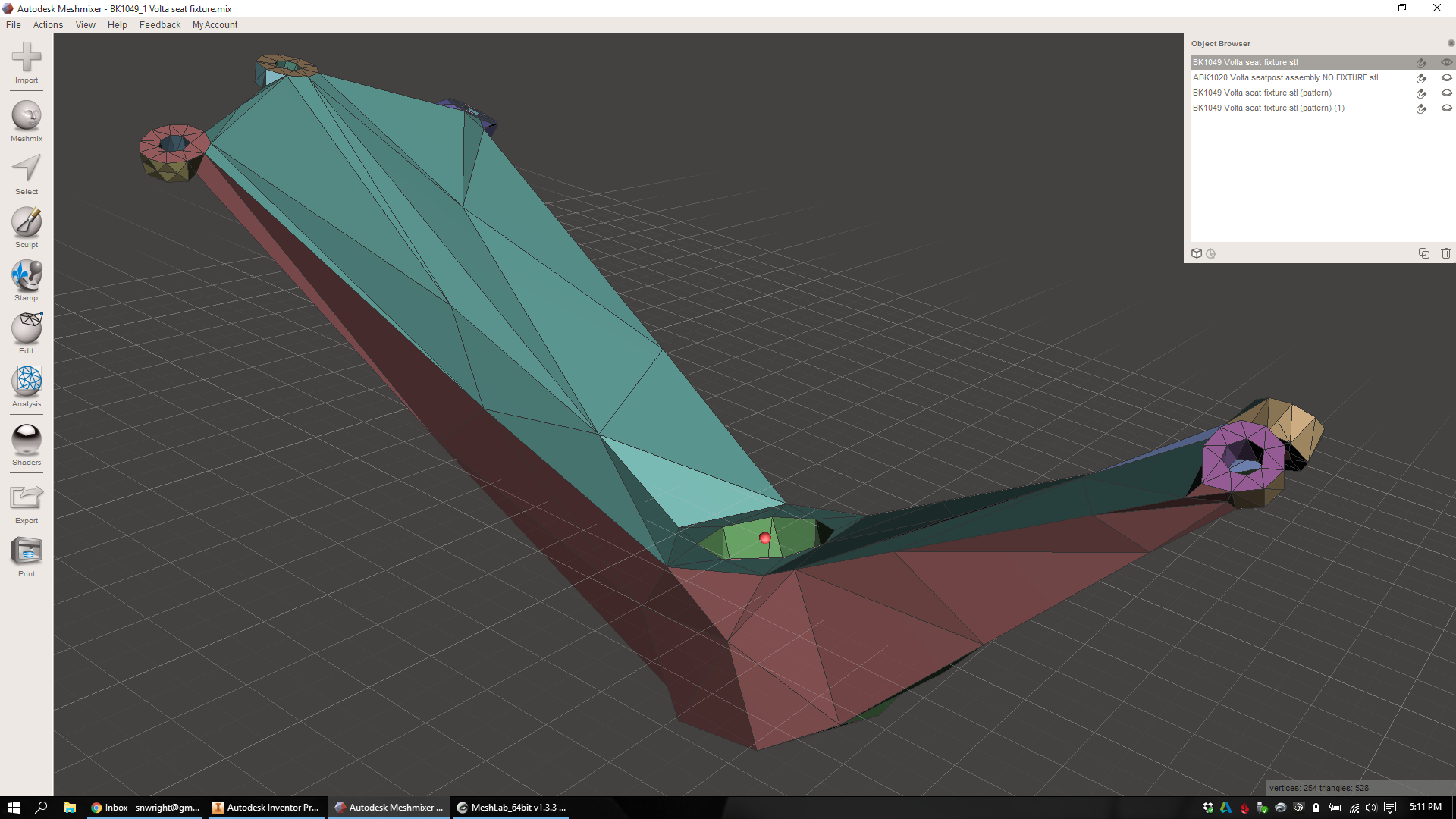

Why does this matter? When I had this part EBM printed recently, both the saddle clamp cylinder and the seatpost cylinder came out undersized. I know now that one of two things needs to happen there: either I need to compensate for the printing process in the underlying model (by making the designed dimension larger than I actually want it to be), or I need to remove material from the as-printed part (by machining, grinding, or similar).

Magics doesn't know any of this. If it did, it might be able to give me intelligent advice on what surfaces to take extra care with - and which I should ignore, as they'll be machined away in the end regardless.

In the end, Magics is a piece of CAM software - but it only deals with one step in the production chain. Changing this is a monstrous, complex task, but it's one whose impact will be hugely positive.

So

Magics is pretty cool - it does a *ton* of really useful stuff. You'll note, also, that I'm basically not interested at all in its "fix" feature, which (I'm told) is used a lot with models that come out of Rhino.

But it's also representative of a lot of what's going on in industrial additive manufacturing today. This isn't Materialise's fault; it's just the way things evolved, and is the result of (I'm sure) a lot of collaboration, competition, and plain old hustling (all of which I fully support) in the industry over the past few decades.

Regardless, Magics is a place where you can see a lot of the implicit assumptions that industrial additive manufacturing has been built upon. More on this soon.