Recently a friend sent me a link to a blog post titled CAD Is a Lie: Generative Design to the Rescue. In it, Autodesk’s Jeff Kowalski touches on many of the points I made in Computer aided design, and in some cases takes a position that opposes mine. About Dreamcatcher's design process, he writes:

This is the computer becoming creative and able to generate ideas that people help to develop. Beyond creativity, the sea change is the computer’s ability to learn.

I’ve spent a lot of time over the past month thinking about these two sentences, and I still don’t know what to make of them. Allow me to work through a few thoughts.

Creativity and autonomy

As I've written here before, I'm excited for CAD software to be increasingly embedded with knowledge about how designs relate to the real world (there are some early ideas here, and here, and here, etc.). But I wouldn't say that I was looking for creativity per se - and I'm skeptical that Kowalski is either.

Kowalski describes a process in which "you describe your well-stated problem, and then, using generative methods, the computer creates a large set of potential solutions." This is a fine idea in and of itself, but it doesn't have anything to do with creativity - and to confuse the two would be a fundamental mistake.

Creativity is the process of coming up with original ideas, and a huge part of creativity is the ability to recontextualize - and oftentimes reject outright - the problem that you've been assigned. But if I were to give my computer a "well-stated problem," then it would be counterproductive to allow it to answer creatively. Creativity is in direct opposition to well-stated problems, which are meant to be solved directly - not usurped.

In Environmental humanism through robots, Nicholas Negroponte describes these limitations - and offers a vision for what a computer-as-design-partner would need to look like:

What probably distinguishes a talented, competent designer is his ability both to provide [designs] and to provide for missing information. Any environmental design task is characterized by an astounding amount of unavailable or undeterminable information. Part of the design process is, in effect, the procurement of this information. Some is gathered by doing research in the preliminary design stages. Some is obtained through experience, overlaying and applying a seasoned wisdom. Other chunks of information are gained through prediction, induction and guesswork. Finally, some information is handled randomly, playfully, whimsically, personally.

It is reasonable to assume that the presence of machines, of automation in general, will provide for some of the omitted and difficult-to-acquire information. However, it would appear foolish to suppose that, when machines know how to design, there will be no missing information or that a single designer can give the machine all that it needs. Consequently, we need robots that can work with missing information. To do this, they must understand our metaphors, must solicit information on their own, must acquire experiences, must talk to a wide variety of people, must improve over time, and must be intelligent. They must recognize context, particularly changes in goals and changes in meaning brought about by changes in context.

Here Negroponte is saying that Artificial General Intelligence (which would itself be no small feat; for an overview of AGI and what it would actually mean in practice, see this fantastic Wait But Why post) is a prerequisite for true Computer Aided Design. He's also saying that once we develop true CAD systems, we'll have to abandon the command-and-control attitude that we have towards today's robot underlings: in essence, we'll need to accept that they may refuse to solve our well-stated problems at all.

Human machine interfaces

Kowalski also overstates our ability to define well-stated problems in the first place. As I wrote in Computer aided design, "optimization software has only just begun the task of rethinking how engineers tell their computers what kind of decisions they need them to make." As David Mindell describes in Our Robots, Ourselves, commercial aviation is much further ahead on this point (emphasis mine):

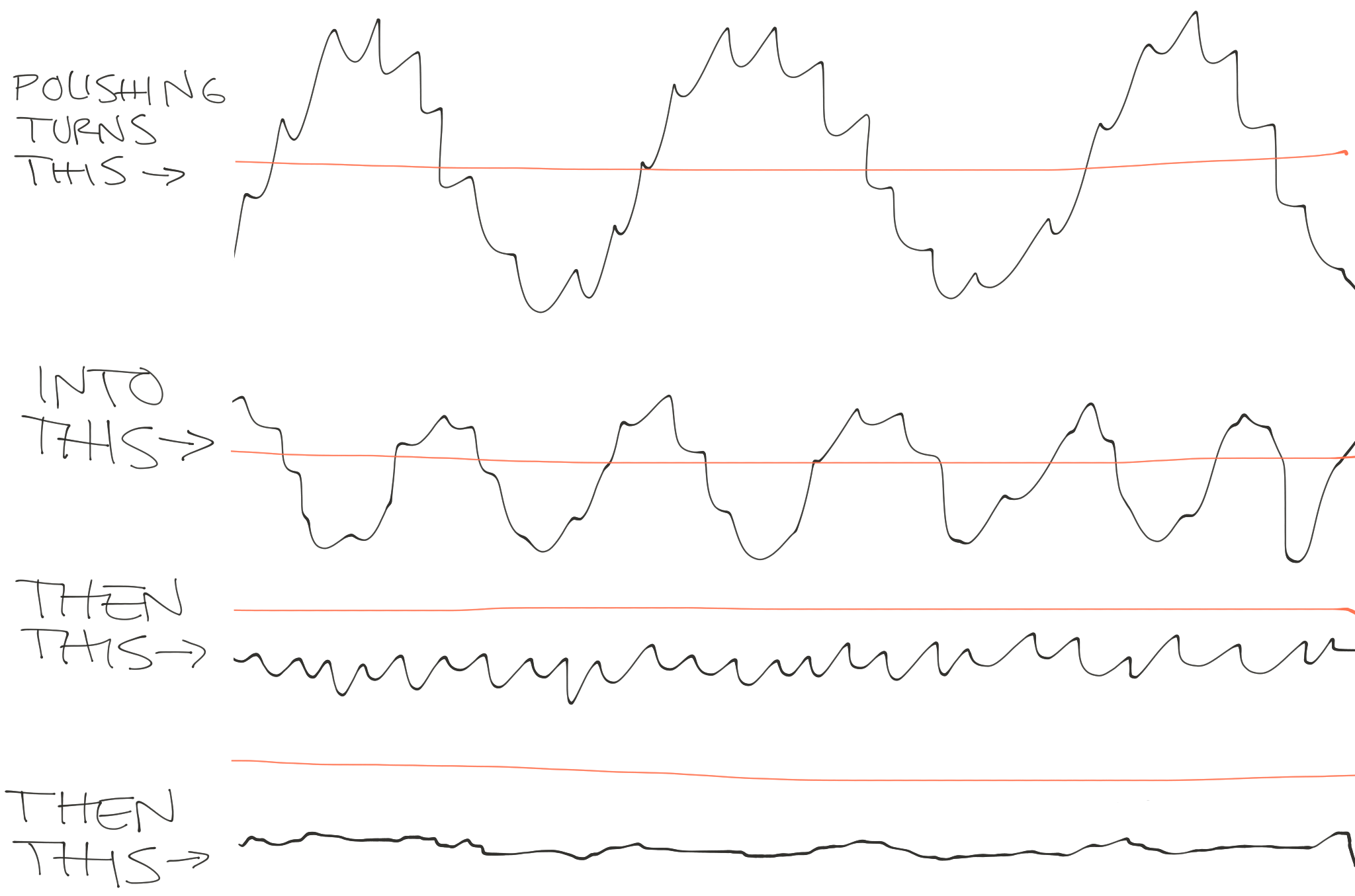

[Heads up displays] represent a new approach to the problem: an innovation which, while certainly “high tech,” is an innovation in the humans’ role in the system. Rather than sitting back and monitoring, the pilots are actively involved. Again, sometimes more automated is actually a less sophisticated solution...as we look for solutions to automation problems that arise in aviation and other domains, we should seek innovations in how we combine people and machines, rather than simply adding additional equipment and software. Some of these innovations have been called “information automation,” which provides data in new forms to human pilots, as opposed to “control automation,” which actually flies the aircraft for them.

With the advent of automation, pilots needed new ways of communicating with their airplanes. Heads up displays, and their abstraction from "pitch and power" to "flight path and energy," provided that. If we're to move beyond the age of computer aided documentation, engineers will need new methods of communicating design intent. What will that look like?

As Bill Mitchell (again, via David Mindell's book) said of autonomous cars, "The dashboard should be an interface to the city, not an interface to the engine.” As I wrote in Directions in automation adoption, some areas of robotics are already heading this way. BMW would never ask their Kuka robots to automatically generate optimal toolpaths; that would be unnecessarily complex to engineer, and the result would lack a critical dose of judgement and flexibility. Instead, they ask that their bots complete a straightforward task: follow a toolpath that a human operator defines manually.

In BMW's case, the human is never really "out of the loop" - something I suspect is important in human-machine collaboration. Again I'll quote Mindell's book, this time on space travel:

Nearly fifty years ago, when Neil Armstrong landed the lunar module on the moon, he had both a HUD and an autoland. The HUD was an early, passive design—a series of angle markings etched into Armstrong’s window. The onboard computer read out a number on an LED-like display, and if Armstrong positioned his head correctly he could look through that angle marked on the window. Directly behind the indicated number, then, he saw the actual spot on the moon that the computer was flying him toward.

If he didn’t like the designated area because of rocks or craters, he could give a momentary jog to the joystick in his hand and “redesignate” the landing area fore, aft, or right or left, almost like moving a cursor with a mouse to tell the computer where to land. The computer would then recalculate the trajectory it would use to guide the landing, and give him a new number for where to look. Armstrong could redesignate the landing zone as many times as he liked in a human/machine collaboration that allowed the two to converge and agree on the ideal landing zone, whence the autoland would bring the craft safely down.

But Armstrong never used the autoland. On Apollo 11, he didn’t like the spot the computer had selected for him because there was a crater, and rocks were in the way. Rather than redesignate, a few hundred feet above the moon’s surface he turned off the automated feature and landed in a less automated mode...

The space shuttle, too, had an autoland system...On every single flight, the shuttle commanders turned off the automation well before landing and hand flew the vehicle to the ground.

I asked former shuttle commanders why they didn’t use the autoland even though they had trained for it in simulators and in simulated landings in the shuttle practice aircraft. They responded that if something had gone wrong and they needed to intervene manually, it would be too difficult and disruptive to “get into the loop” so near to the landing (also, the sensitive ground equipment required to guide the autoland was not available at all of the shuttle’s backup landing sites). Given that you’ve got only one chance to land a shuttle, you might as well hand fly it in.

Like Kowalski, I genuinely want CAD software to become my "partner in exploration." And like him, I believe the era of computer aided documentation is outdated. But whether our computers end up being creative or not, I want to communicate with them in a way that respects their abilities - and my own. And I don't think "well-stated problems" are the answer.

I'll close with a final thought from Mindell, this time from an interview he gave to EconTalk:

We have yet to build a system that has no human involvement. There's just human involvement displaced in space or displaced in time. Again, the coders who embed their world view and their assumptions into the machine, or any other kind of designer - every last little bracket or tire on a vehicle has the worldview of the humans who built it embedded into it. For any autonomous system, you can always find the wrapper of human activity...Otherwise the system isn't useful.

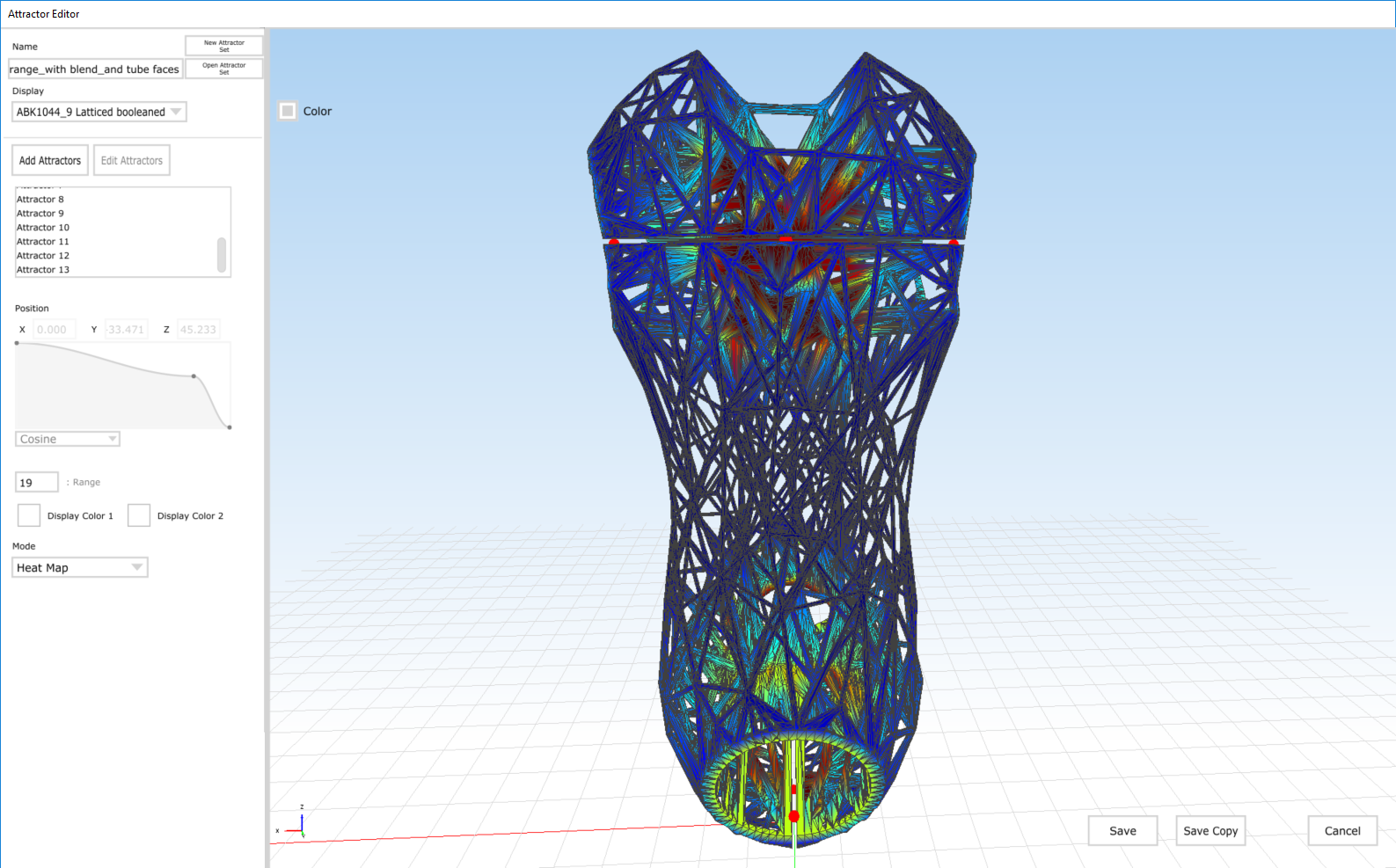

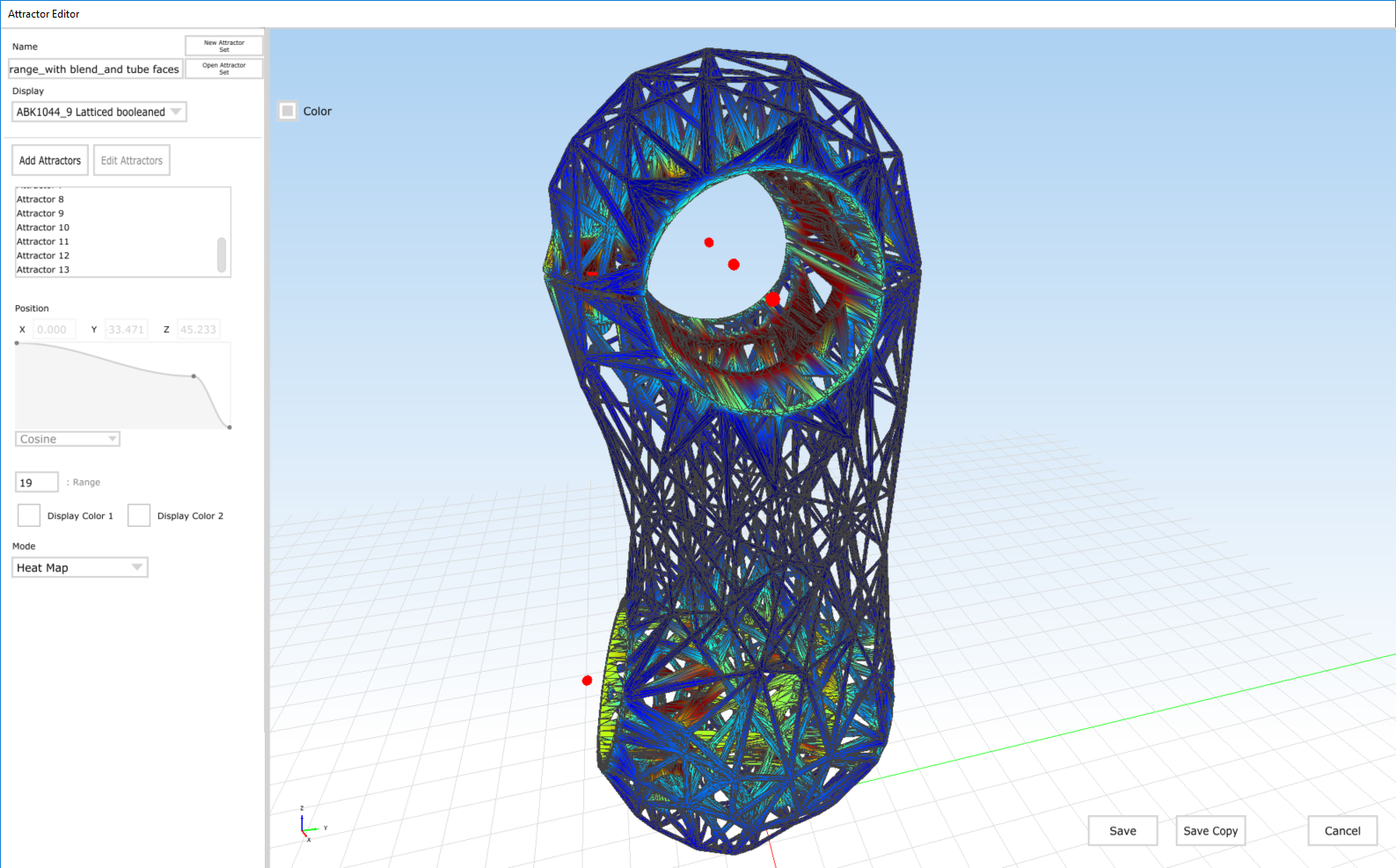

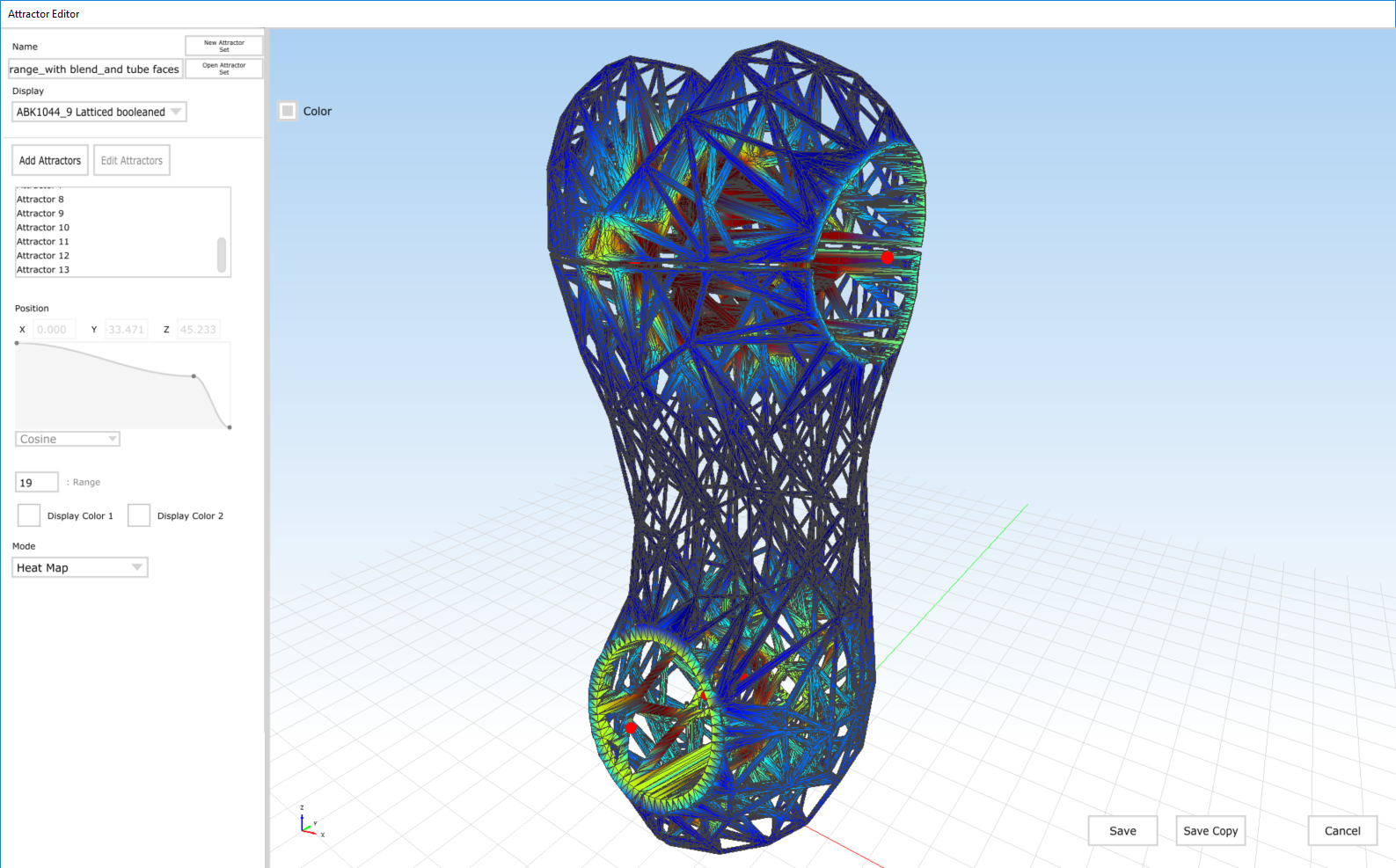

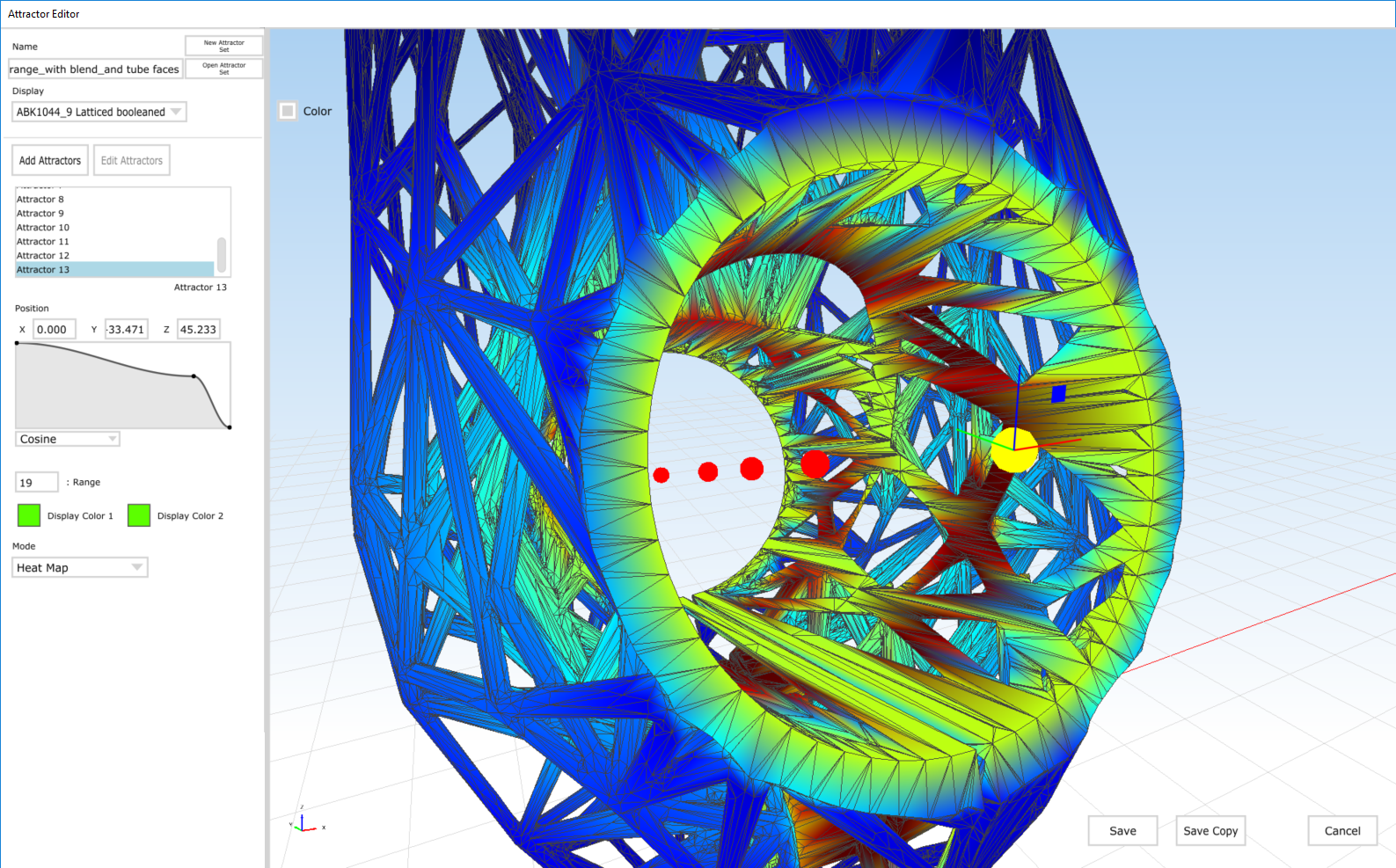

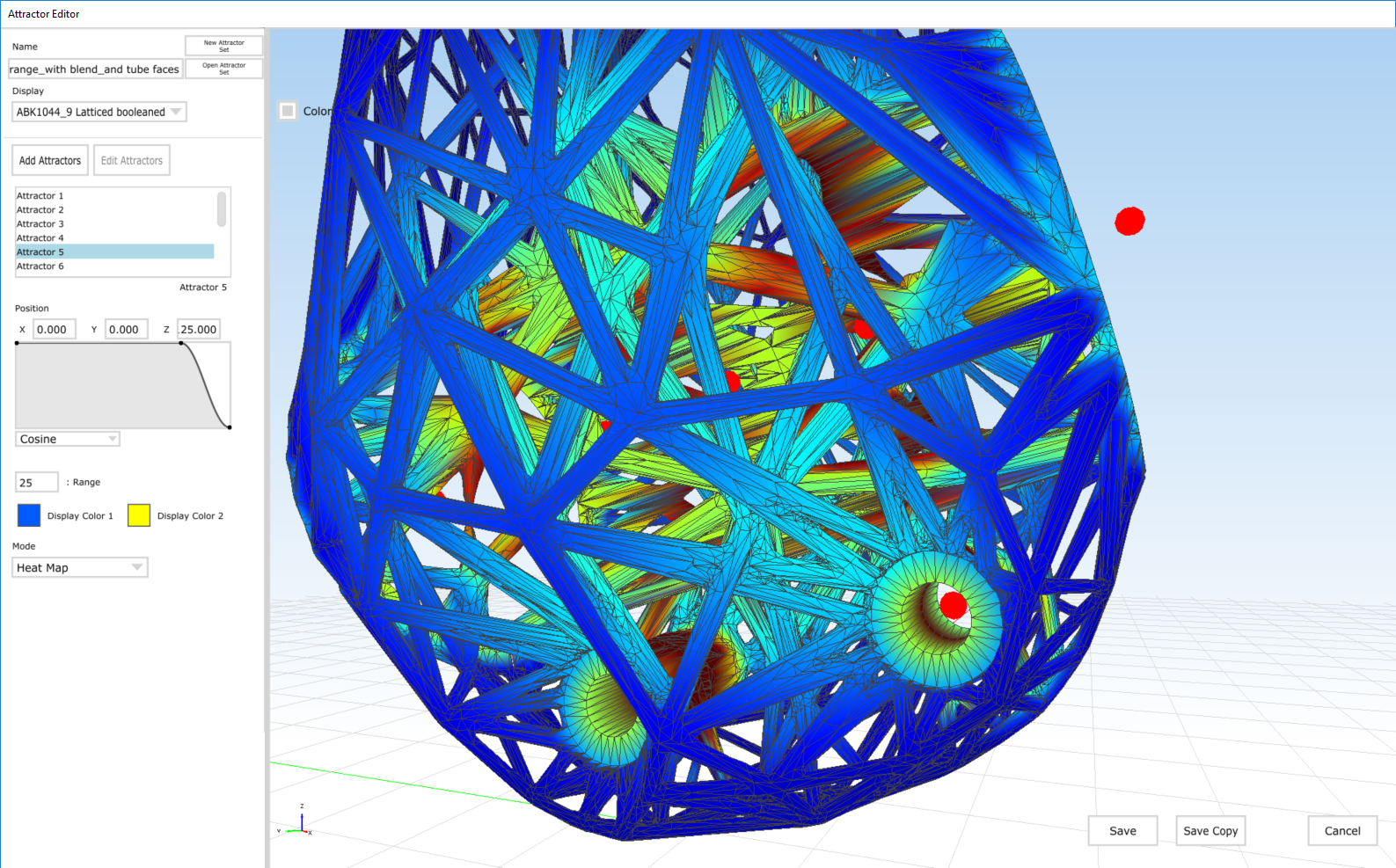

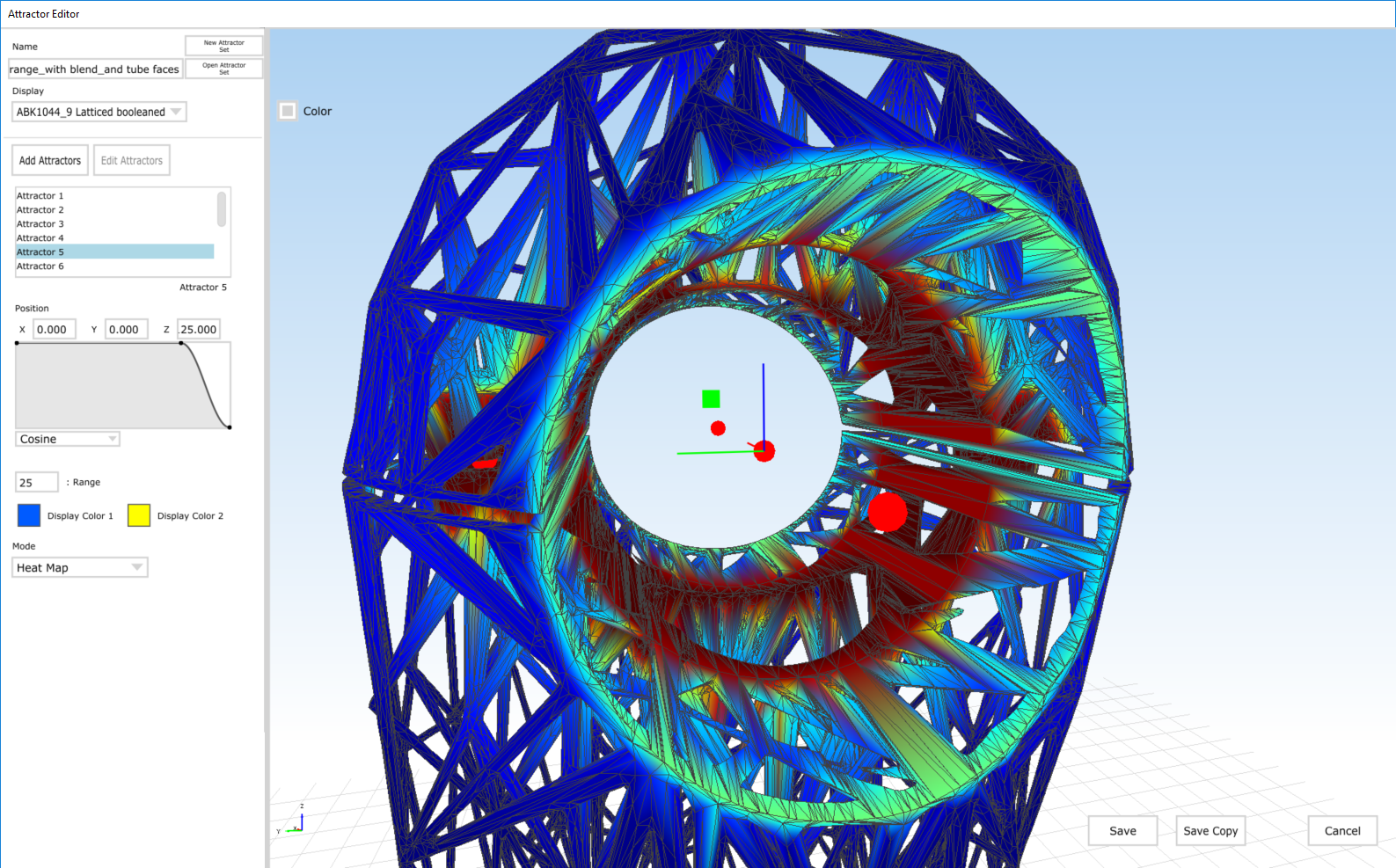

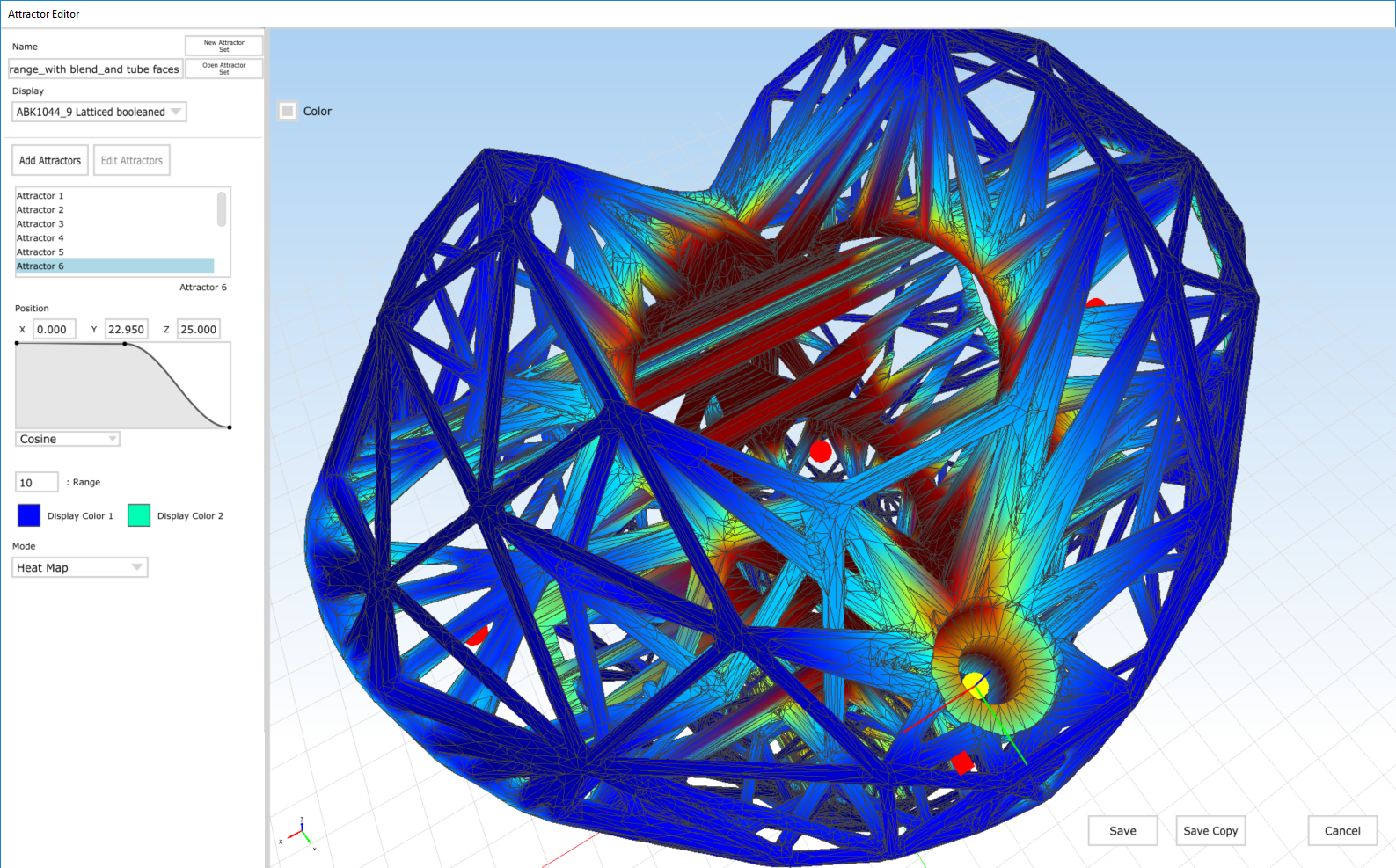

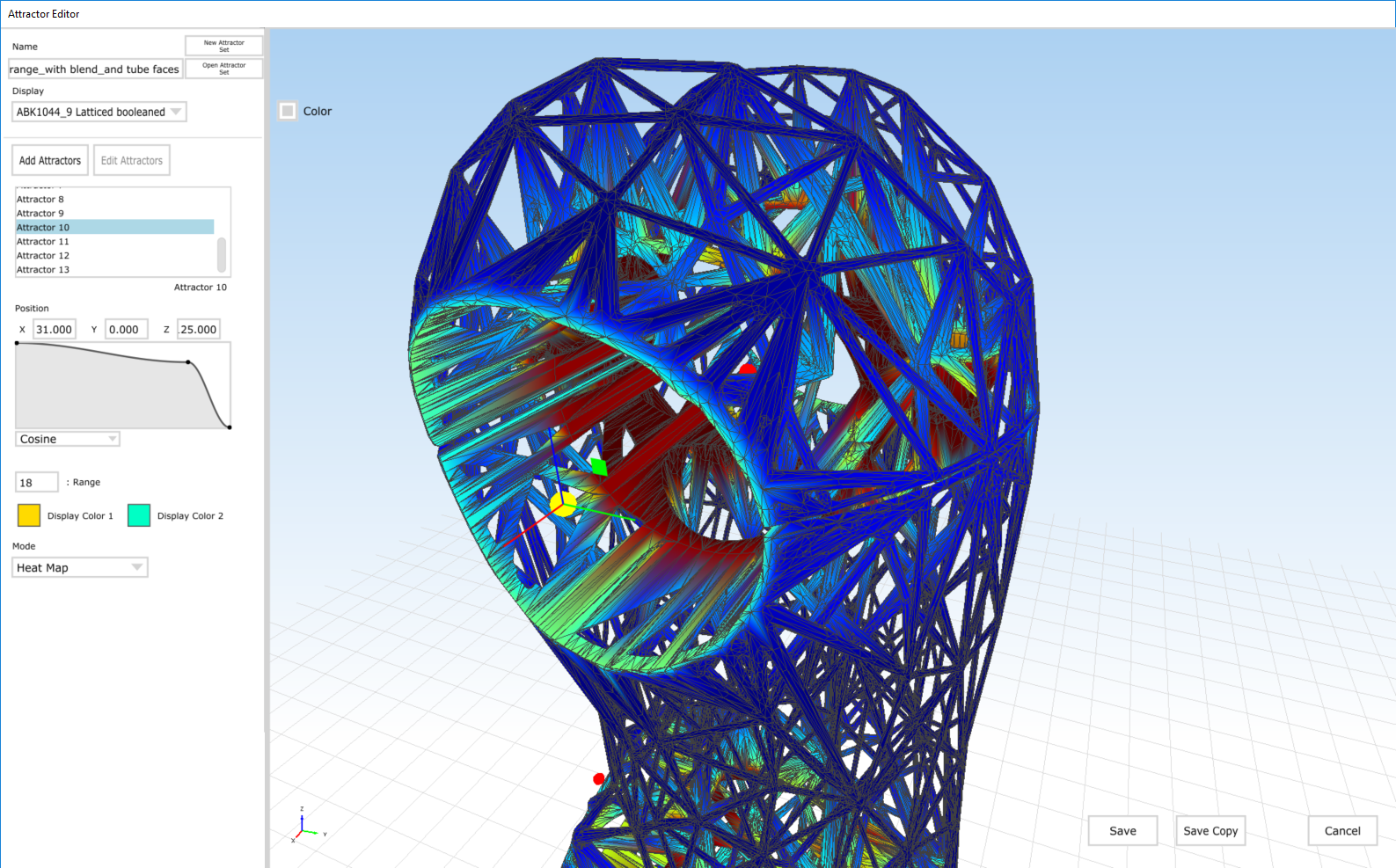

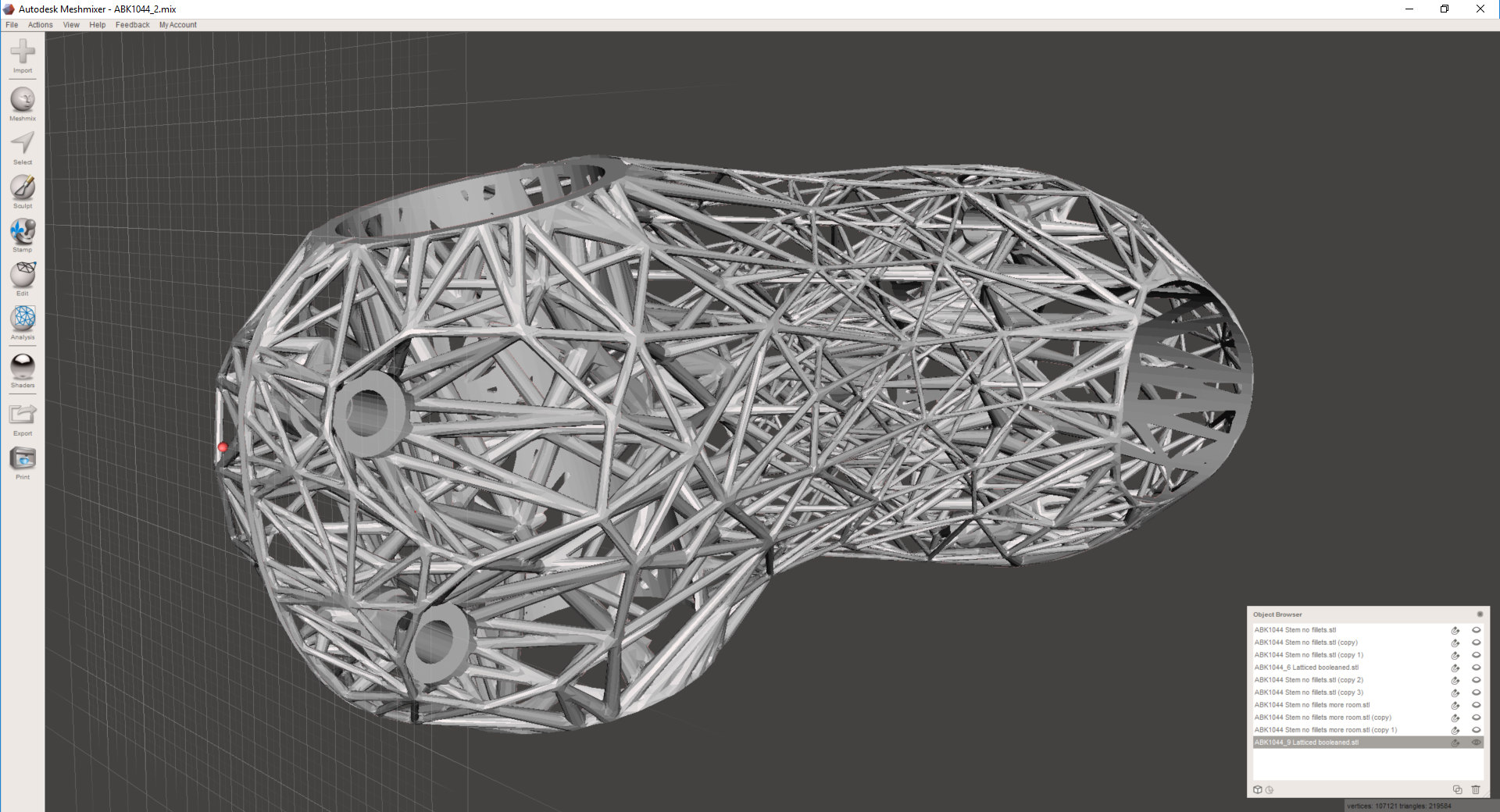

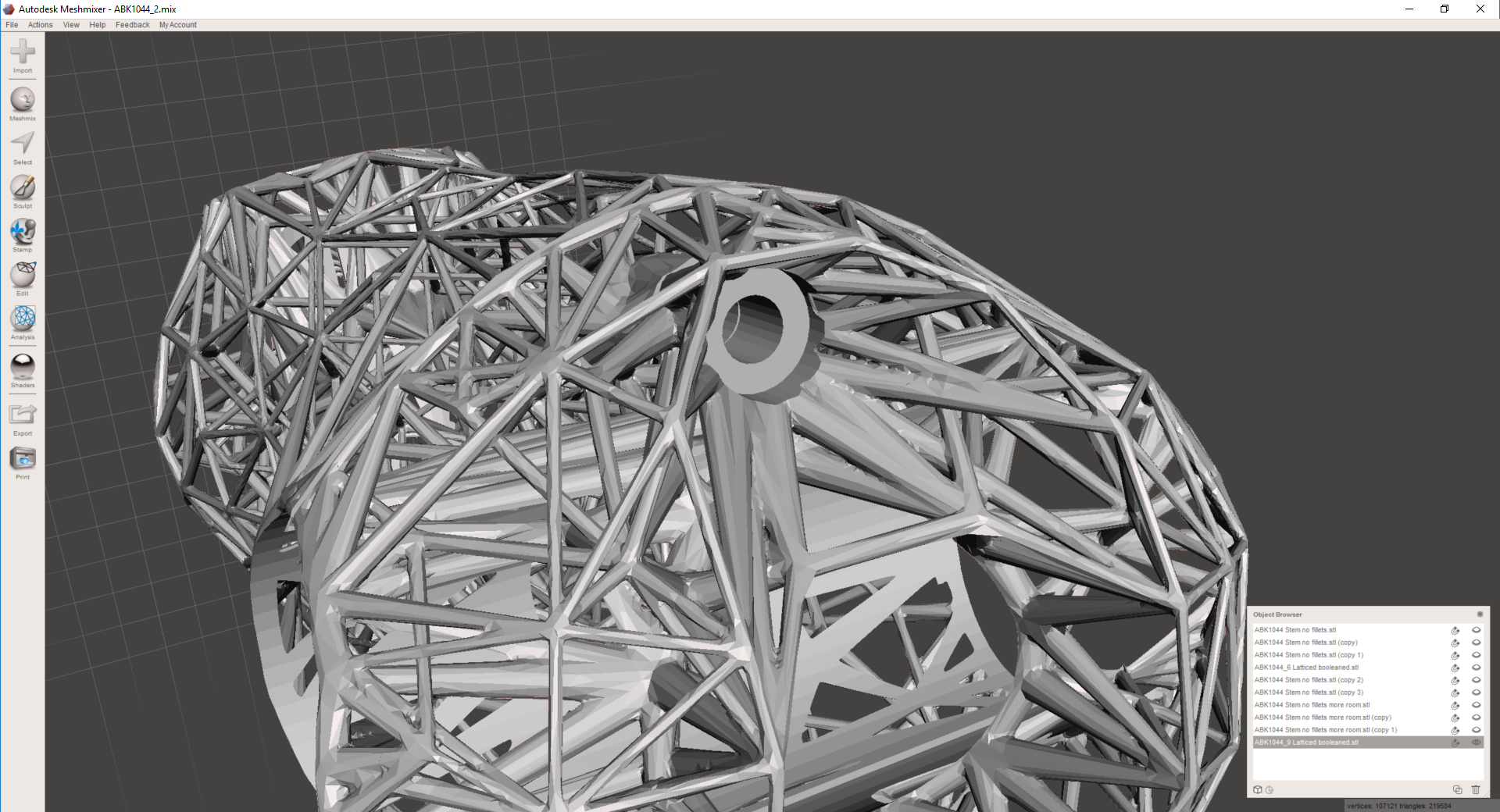

At nTopology, we know that Computer Aided Design will be an increasingly collaborative process. Our goal, then, is to integrate automation in the most useful ways possible - the ones that produce the most effective, efficient parts. I believe that keeping engineers fully in the loop is the way to do that, and I'm looking forward to making it happen.