The photos in this tweet have been public for over a year now. I've been aware of the project since last June; it was created by Arup, the fascinating global design firm (whose ownership structure is similarly fascinating). They needed a more efficient way to design and manufacture a whole series of nodes for a tensile structure, and for a variety of reasons (including, if I recall correctly, the fact that each node was both unique and difficult to manufacture conventionally) they decided to try out additive manufacturing. As it happens, I was lucky enough to speak to the designer (Salomé Galjaard) by phone a few months ago, and enjoyed hearing about the way they're thinking of applying AM to large construction projects.

In short: I'm a fan of the project, and love to see it get more exposure. There's something about the particular wording of Jo Liss's tweet, though, that is strange to me. Specifically, I find myself asking whether a computer did, indeed, design the new nodes.

(Note: I don't know Jo Liss and don't mean to be overly critical of her choice of wording; it's simply a jumping off point for some things I've been mulling over. I also don't believe that I have any proprietary or particularly insightful information about how Arup went about designing or manufacturing the nodes in question.)

As far as I can tell, Arup's process worked like so: Engineers modeled a design space, defined boundary conditions at the attachment points (which were predefined), and applied a number of loading conditions to the part. Here the story gets less clear; some reports mention topology optimization, and others say that Arup worked with Within (which is *not* topology optimization). My suspicion is that they used something like solidThinking Inspire to create a design concept, and then modeled the final part manually in SolidWorks or similar. Regardless, we can be nearly sure that the model that was printed was indeed designed by a human; that is, the actual shapes and curves we see in the part on the right were explicitly defined by an actual engineer, NOT by a piece of software. This is because nearly every engineered component in AEC needs to be documented using traditional CAD techniques, and neither Within nor solidThinking (nor most of the design optimization industry) supports CAD export. As a result, most parts that could be said to be "designed by a computer" are really merely sketched by a computer, while the actual design & documentation is done by a human.

This may seem like a small quibble, but it's far from trivial. Optimization software (whether shape, topology, or parametric) is expensive, and as a result most of the applications where it's being adopted involve expensive end products: airplanes, bridges, hip implants, and the like. Not coincidentally, those products tend to have stringent performance requirements - which themselves are often highly regulated. Regulation means documentation, and regulating bodies tend not to be (for totally legitimate reasons which are a bit beyond the scope of this blog post) particularly impressed with some computer-generated concept model in STL or OBJ format. They want real CAD data, annotated by the designer and signed off by a string of his or her colleagues. And we simply haven't even started to figure out how to get a computer to do any of that stuff.

I'm reminded here also of something that I've spent a bunch of time considering over the past six months. The name "CAD" (for Computer Aided Design) implies that SolidWorks and Inventor and Siemens NX are actively helping humans design stuff. To me, this means making actual design decisions, like where to put a particular feature or what size and shape an object should be. But the vast majority of the time that isn't the case at all. Instead, traditional CAD packages are concerned primarily with helping engineers to document the decisions that they've already made.

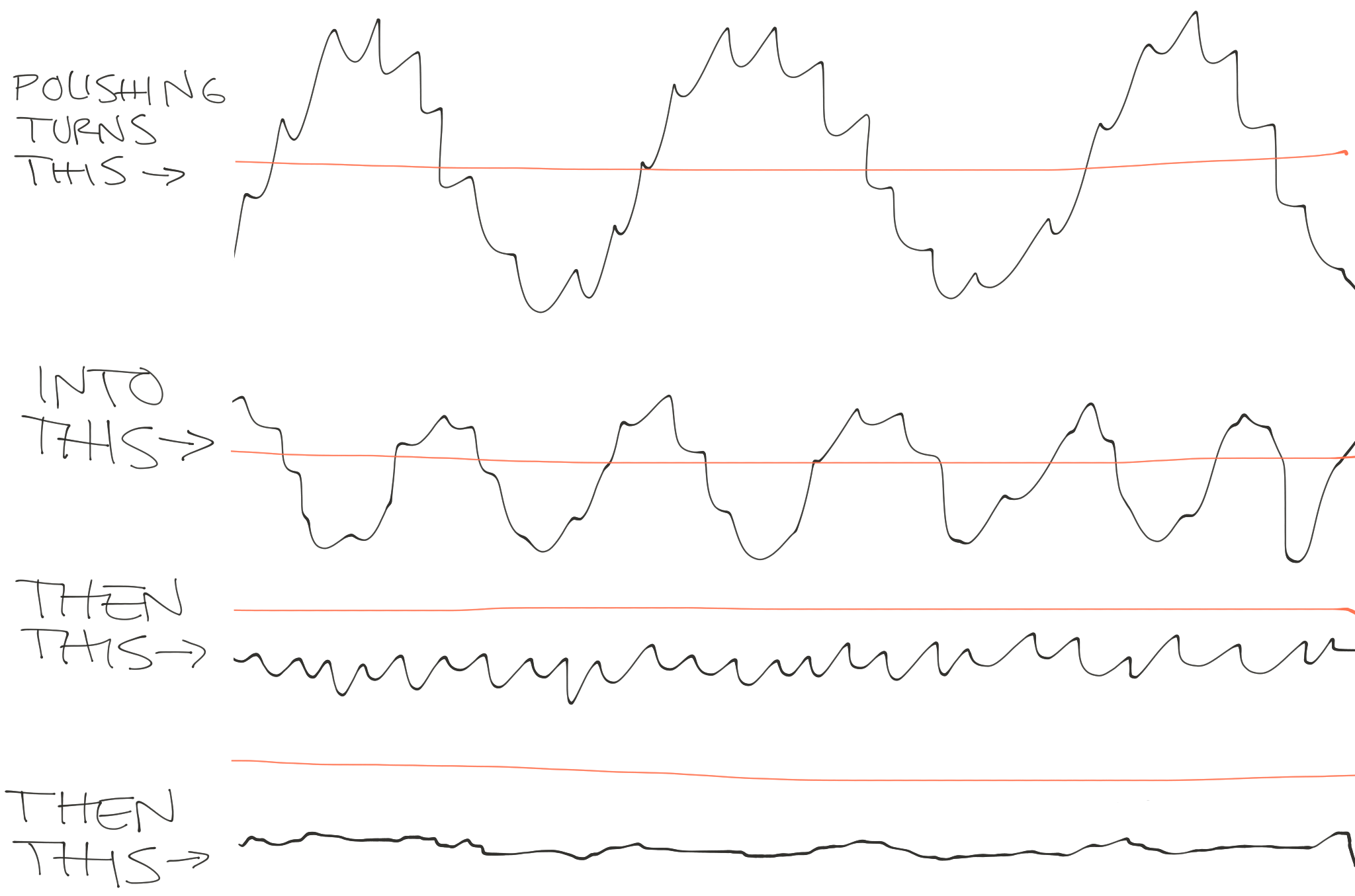

The implications of this are huge. Traditional CAD packages never had to find ways for the engineer to communicate design intent; they only needed to make it easy for the engineer to, for instance, create a form that transitions seamlessly from one size and shape to another. For decades, that's been totally fine: the manufacturing methods that we had were primarily feature based, and the range of features that we've been good at making (by milling, turning, grinding, welding, etc.) is very similar to the range of features that CAD packages were capable of documenting.

But additive manufacturing doesn't operate in terms of features. It deals with mass, and that mass is deposited layer by layer (with the exception of technologies like directed energy deposition, which is different in some ways but still not at all feature based). As a result, it becomes increasingly advantageous to work directly from design intent, and to optimize the design not feature by feature but instead holistically.

One major philosophical underpinning of most optimization software (like Within and solidThinking Inspire) is that the process of optimizing mass distribution to meet some set of design intentions (namely mechanical strength and mass, though longtime readers of this blog will know that I feel that manufacturability, aesthetics, and supply chain complexity must be considered in this calculation as well) is a task better suited to software than to humans. In that respect, these tools are squarely opposed to the history of Computer Aided Documentation. They want CAD software to make actual design decisions, presumably with the input and guidance of the engineer.

If it's not clear, I agree with the movement towards true computer aided design. But CAD vendors will need to overcome a number of roadblocks before I'd be comfortable saying that my computer designs anything in particular:

First, we need user interfaces that allow engineers to effectively communicate design intent. Traditional CAD packages never needed this, and optimization software has only just begun the task of rethinking how engineers tell their computers what kind of decisions they need them to make.

Second, we need to expand the number of variables we're optimizing for. Ultimately I believe this means iteratively focusing on one or two variables at a time, as the curse of dimensionality will make high-dimensional optimization impractical for the foreseeable future. It's because of this that I'm bullish on parametric lattice optimization (and nTopology), which optimizes strength and weight on lattice structures that are (given input from the engineer) inherently manufacturable and structurally efficient.
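
To put rough numbers on the curse of dimensionality, here's a back-of-the-envelope sketch in Python. It is not how Within, Inspire, or nTopology work; the function names and the "10 samples per variable" figure are my own assumptions for illustration. It compares a brute-force search over every combination of design variables against sweeping the variables two at a time while holding the others fixed.

```python
def full_grid_evaluations(n_variables: int, samples_per_axis: int) -> int:
    """Evaluations needed to try every combination of sampled values."""
    return samples_per_axis ** n_variables


def pairwise_sweep_evaluations(n_variables: int, samples_per_axis: int,
                               sweeps: int = 1) -> int:
    """Evaluations needed when variables are searched two at a time.

    Each pair is searched on its own 2D grid while the remaining
    variables are held fixed (hypothetical strategy, for illustration).
    """
    n_pairs = (n_variables + 1) // 2  # an odd variable out gets its own group
    return sweeps * n_pairs * samples_per_axis ** 2


if __name__ == "__main__":
    for n in (2, 4, 8):
        print(n, full_grid_evaluations(n, 10), pairwise_sweep_evaluations(n, 10))
```

The brute-force count grows exponentially with the number of variables, while the pairwise count grows only linearly. The catch is that a pairwise sweep can miss interactions between variables in different pairs, so it isn't guaranteed to find the same optimum; that trade-off is part of why I think the engineer's input still matters here.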

Third, we need a new paradigm for documentation. This is for a few reasons. To start, the kinds of freeform & lattice structures that additive manufacturing can produce don't lend themselves to traditional three view 2D drawings. But in addition, there's a growing desire [citation needed] within engineering organizations to unify the design and documentation processes in some way - to make the model itself into a repository for its own design documentation.

These are big, difficult problems. But they're incredibly important to the advancement of functionally driven design, and to the integration of additive manufacturing's advantages (which are significant) into high value industries. And with some dedicated work by people across advanced design and manufacturing, I hope to see substantive progress soon :)